RESEARCH WORK

EmotiCal: tracking emotional wellbeing

Track your mood

Introduction

Some context and description

While current personal informatics systems provide rich records of our pasts, they typically fail to convert these records into actionable future plans. To address this, a research team at the UCSC HCI Lab, led by Ph.D. student Victoria H., built EmotiCal, an emotion forecasting system designed to improve users’ everyday moods and promote well-being.

Some Context

I was assisting another HCI Lab Ph.D. student, Ryan C., with research on identifying the social roles of members of online communities when I was “stolen” by another team to contribute design and development work to EmotiCal.


UC Santa Cruz Human Computer Interaction Lab

Sep 2017 - March 2018

User Research, UI/UX Design, Development

Victoria H., Cindy T., Alon P.

Sketch, InVision, Balsamiq, Figma

Overview

Mechanical Emotions

What EmotiCal eats for breakfast

Purpose

EmotiCal was built as part of a research project to explore algorithmic authority, or people’s tendency to trust machine-based interpretations, deferring their own interpretation to the system’s output.

To do so, we examined ‘emotion sensing’ systems that evaluate how one is feeling. To understand how machine feedback influences emotional self-judgments, we experimentally compared three system framings, Positive (alert and engaged), Negative (stressed), and Control (no framing), in a mixed-methods study with 64 participants.

Motivated by initial surveys and user interviews, EmotiCal analyzes logs of users’ past moods and mood triggers to generate a 2-day forecast predicting users’ potential future moods. EmotiCal encourages users to change these predictions by recommending enjoyable activities that are personally tailored to the user and connect to higher mood ratings.
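To make the recommendation idea concrete, here is a minimal sketch of ranking activities by the average mood logged alongside them. It is not EmotiCal's actual model: the entry format, the `rank_activities` helper, the `min_count` threshold, and the sample log are all assumptions made up for illustration.

```python
from collections import defaultdict
from statistics import mean


def rank_activities(entries, min_count=2):
    """Return (activity, mean mood) pairs sorted by mean mood, highest first.

    `entries` is a hypothetical log format: each entry is a dict with a
    "mood" rating on the -3..3 scale and a list of tagged "activities".
    Activities logged fewer than `min_count` times are skipped so that a
    single good day does not dominate the ranking.
    """
    moods_by_activity = defaultdict(list)
    for entry in entries:
        for activity in entry["activities"]:
            moods_by_activity[activity].append(entry["mood"])

    ranked = [
        (activity, mean(moods))
        for activity, moods in moods_by_activity.items()
        if len(moods) >= min_count
    ]
    ranked.sort(key=lambda pair: pair[1], reverse=True)
    return ranked


# Made-up sample data, not taken from the study.
sample_log = [
    {"mood": 2, "activities": ["walk", "friends"]},
    {"mood": -1, "activities": ["work"]},
    {"mood": 3, "activities": ["walk"]},
    {"mood": -2, "activities": ["work"]},
    {"mood": 1, "activities": ["friends"]},
]

for activity, average in rank_activities(sample_log):
    print(f"{activity}: {average:+.1f}")
```

The activities at the top of such a ranking would be natural candidates to suggest when the forecast looks low.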

A three-week field study with 60+ participants evaluated the effectiveness of EmotiCal against a mood-monitoring-only intervention and a do-nothing control. Activity recommendations and visualizations of future mood, as prompted by EmotiCal, improved log-file mood ratings, pre-post changes in self-awareness, and the reported frequency and success of engaging in activities to improve mood.

In these first designs of EmotiCal, the main focus was on delivering a functional product that would get entries and participation as consistent as possible.

My Role

As a research assistant and lead designer on the project, my role was to conduct user research, create wireframe mockups and user flows, execute high-fidelity designs, implement the UI, build rapid prototypes, run usability tests, and ultimately design a functional experience for the purposes of the research and the participants involved. On the development side of the web app, I implemented the CSS, data modeling, and data visualizations. The app was built with AngularJS, Foundation for Apps, and D3.js. The mockups and prototypes were designed using a mixture of Axure RP Pro, Balsamiq, InVision, and Sketch.

We applied human-centered design practices including but not limited to: need-finding interviews, card sorting, hierarchical task analyses, user personas, storyboards, usability testing, paper sketching, and interactive prototypes.

Implementation

Rather than deploying the product as a native iOS or Android application, we chose a responsive web app in order to reach the widest user base (Android, iOS, and computer users). This choice also accommodated our limited development resources and tight schedule. I optimized the UI for mobile, but ensured that it was still functional and visually appealing for laptop/desktop users.

*Note*: I am currently starting design and development on both a native wearable stress app and an Android EmotiCal app.

Understand

Define, learn, and empathise

So I started out on this design with a hunch that people prefer different means and times of contact. Sure, I could have pored over the data to find an apparent problem, but I like taking a more instinctual approach initially.

EmotiCal

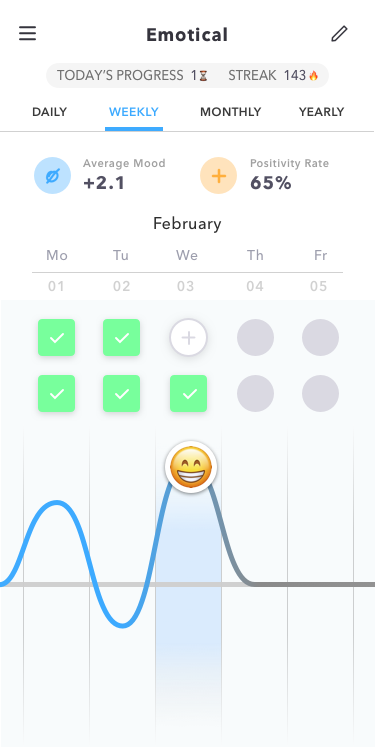

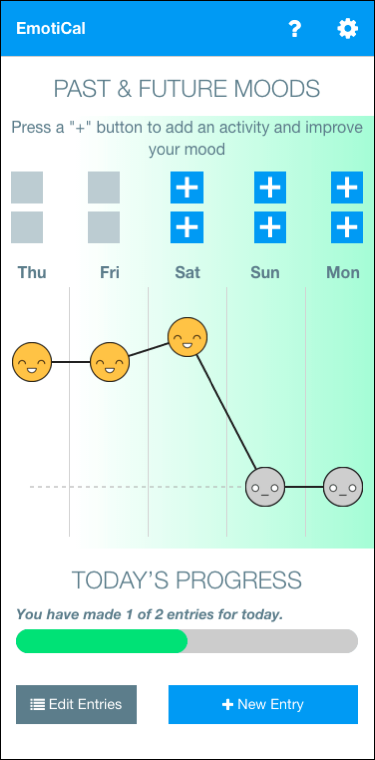

After the initial login screen, a user would then be directed to the app’s home screen. The home screen needed to serve two core purposes:

- Show the user’s emotional forecast

- Provide convenient access to add new entries and edit past entries

EmotiCal’s Home Screen

After discussions and a review of the academic literature on the best time intervals for self-reflection, I eventually settled on a dashboard view with the 5-day mood “forecast” central in the view (with the ability to toggle the length of time), and “+” add buttons and green edit buttons in the middle of the screen for easy thumb reach on smartphones.

To encourage participants to complete their entries, I included a Snapchat-style streak feature to gamify the entry reporting process. In later A/B testing, this increased participants’ daily entry rates by 11.7%.
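For illustration, a streak counter of this kind can be sketched in a few lines. This is only one plausible way to do it, not the code used in the app; the `current_streak` helper and its rule of not breaking the streak until a full day has been missed are assumptions.

```python
from datetime import date, timedelta


def current_streak(entry_dates, today):
    """Count consecutive days with at least one entry, ending at `today`.

    If nothing has been logged today yet, counting starts from yesterday,
    so the streak is not reset in the middle of the day (an assumed rule).
    """
    logged = set(entry_dates)
    day = today if today in logged else today - timedelta(days=1)

    streak = 0
    while day in logged:
        streak += 1
        day -= timedelta(days=1)
    return streak


# Made-up dates: three days in a row, a gap, then one more entry.
dates = [date(2018, 1, 1), date(2018, 1, 2), date(2018, 1, 3), date(2018, 1, 5)]
print(current_streak(dates, date(2018, 1, 3)))
```

Using a set of dates means that logging twice in one day, as the study required, still counts as a single streak day.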

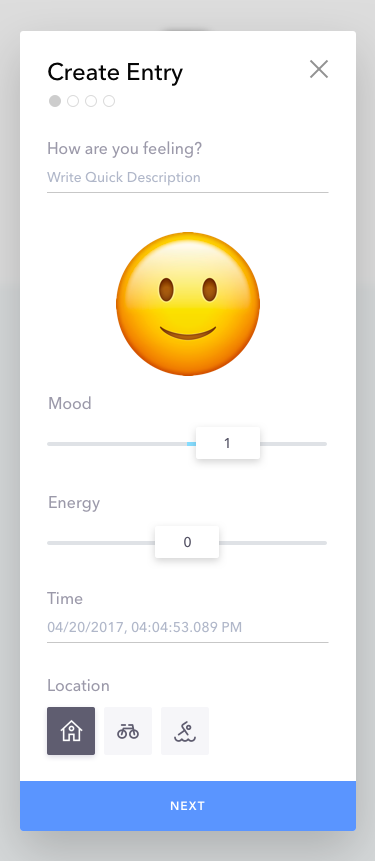

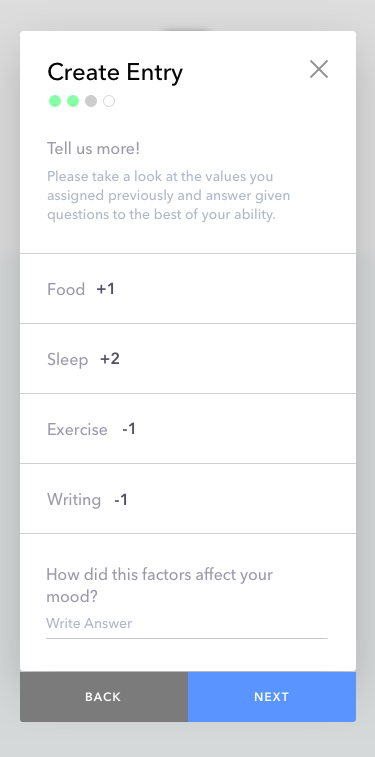

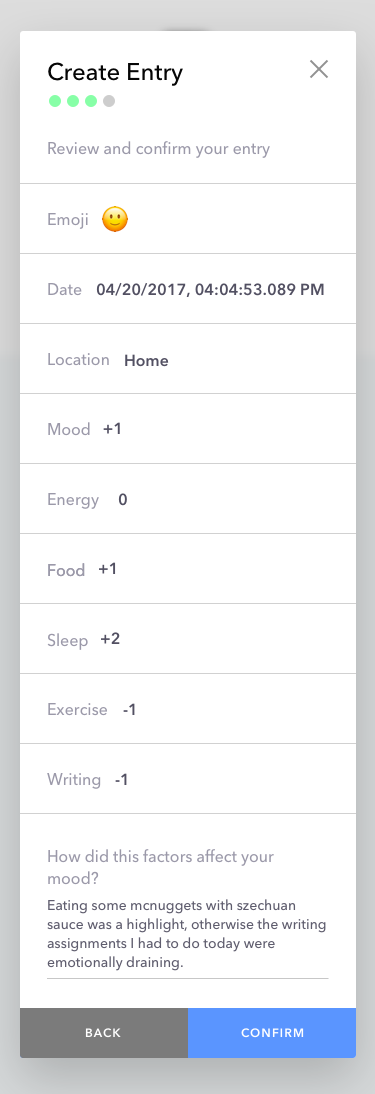

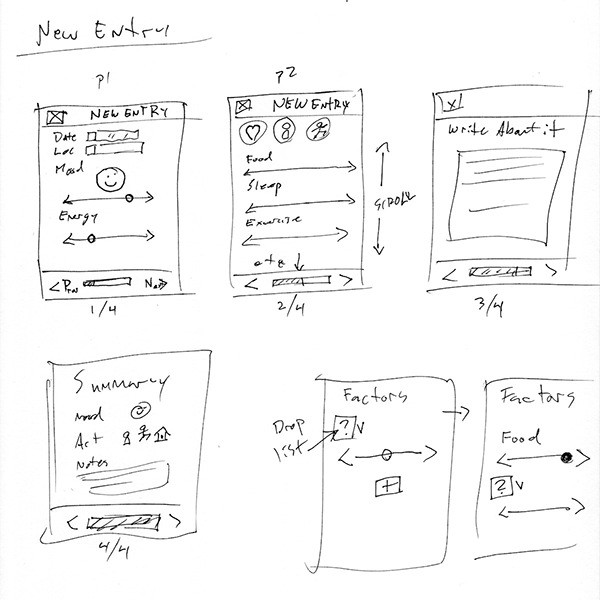

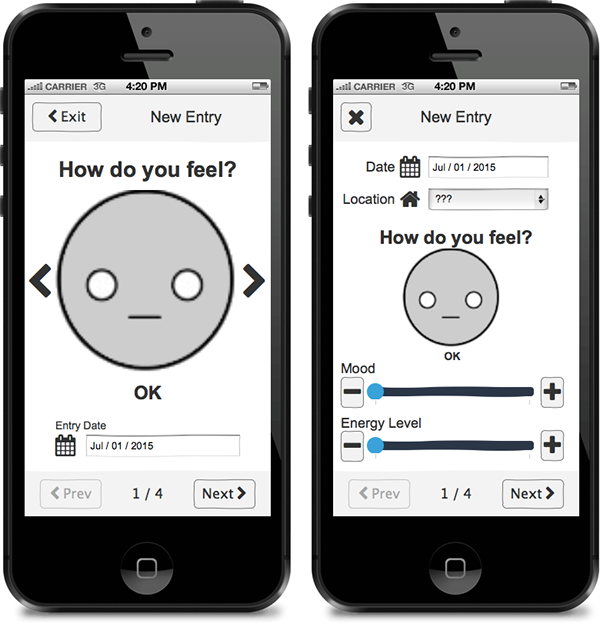

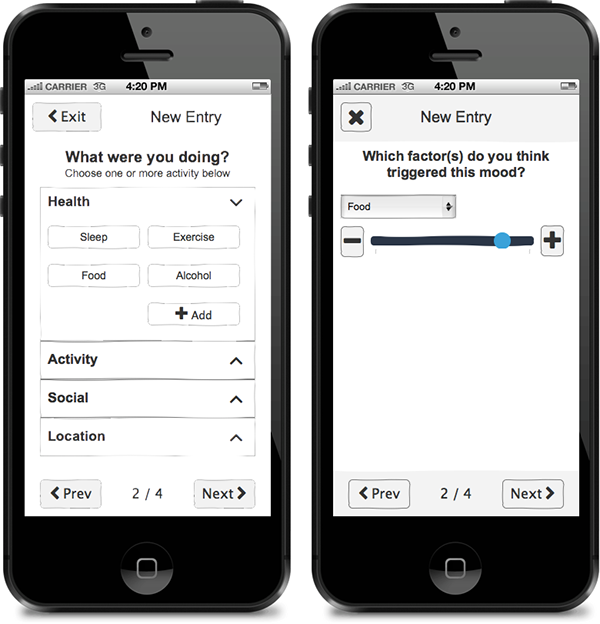

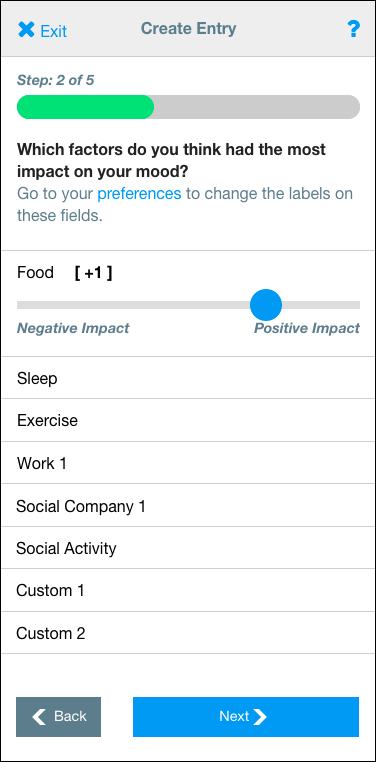

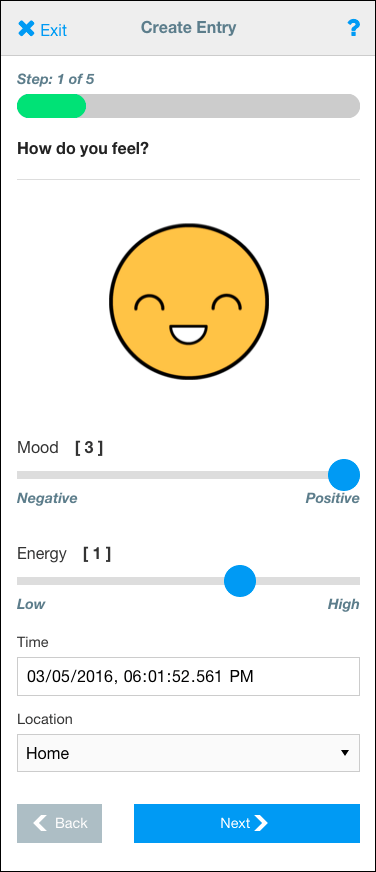

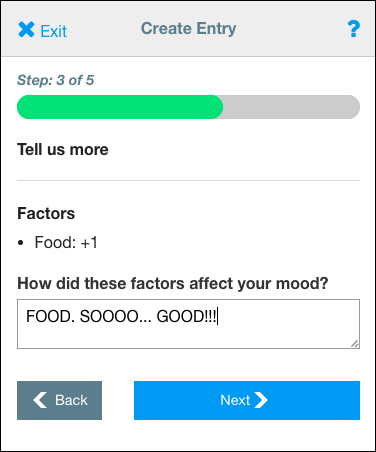

Tapping or clicking the edit or add entry buttons, or the write entry icon at the top right, opens a modal with a four-step, multi-view, single-column entry form.

Write Entry Screen 1

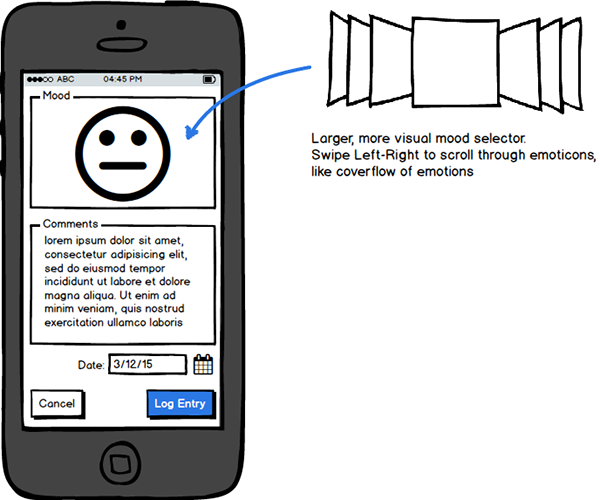

The research study required participants to log entries twice a day, so it was critical to make this process simple, quick, and pleasant. Through user testing, I determined that most people preferred a series of several short entry pages over one long entry form (most likely due to cognitive overload) and that emoticons or emojis were a favored visual.

I then settled on a design featuring sliders (on a -3 to 3 scale, as needed for the emotion modeling algorithm) with labeled points, large enough to operate easily on a smartphone. The emoji reflected the mood value the user entered and was animated with a bounce that varied with the energy level. Post-test interviews confirmed this feature was well received.
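The mapping from slider values to the emoji can be sketched roughly as follows. This is an illustrative assumption rather than the app's real logic: the emoji chosen for each mood value, the `emoji_state` helper, the `max_bounce_px` parameter, and the premise that the energy slider uses the same -3 to 3 integer scale as mood are all hypothetical.

```python
SCALE_MIN, SCALE_MAX = -3, 3

# Hypothetical face for each integer mood value on the -3..3 scale.
MOOD_EMOJI = {-3: "😭", -2: "😢", -1: "🙁", 0: "😐", 1: "🙂", 2: "😄", 3: "😁"}


def emoji_state(mood, energy, max_bounce_px=24):
    """Pick the emoji for `mood` and a bounce height scaled by `energy`.

    Both inputs are assumed to be integers on the -3..3 slider scale.
    Energy is normalized to 0..1, so the lowest energy gives no bounce
    and the highest energy gives the full `max_bounce_px`.
    """
    for value in (mood, energy):
        if not SCALE_MIN <= value <= SCALE_MAX:
            raise ValueError(f"slider value out of range: {value}")

    level = (energy - SCALE_MIN) / (SCALE_MAX - SCALE_MIN)
    return {"emoji": MOOD_EMOJI[mood], "bounce_px": round(level * max_bounce_px)}


print(emoji_state(mood=2, energy=3))
```

In the web app itself, values like these would drive the animation on the front end; the sketch only shows the arithmetic.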

Note: while the sliders provide a clear visual cue of what they are and how to use them, setting a slider to a specific number can be difficult. So I am thinking about keeping the slider pattern while also giving users the ability to type in their desired number directly.
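If typed input is added, the value would need to be validated against the slider range. A minimal sketch, assuming whole-number input only and a hypothetical `parse_slider_input` helper that clamps out-of-range numbers instead of rejecting them:

```python
def parse_slider_input(text, low=-3, high=3):
    """Turn typed text into a slider value, or None if it is not a whole number.

    Out-of-range numbers are clamped to the nearest end of the scale,
    which is an assumed design choice rather than a decided behavior.
    """
    try:
        value = int(text.strip())
    except ValueError:
        return None
    return max(low, min(high, value))


print(parse_slider_input(" 2 "))
```

Returning `None` for invalid text lets the form keep the slider's previous position and show a hint instead of failing.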

“How the emoji would cutely move and change made the entry process fun!”

— Participant #CD340

A mock of a feature that may be built for future studies

While talking to Katherine Isbister, a game and human-computer interaction researcher and designer who is currently a professor of computational media at UCSC, we came up with the idea of letting people select from various emojis to reflect their moods in their entries. The idea is that we could later qualitatively analyze what kinds of emojis people use for certain moods.

Write Entry Screen 3

Write Entry Screen 4

The Process

How the sausage gets made

Meetings

I began the design process with a series of meetings with various members of the research project (Lead Investigator, RAs, developers, etc.), where we came together to define EmotiCal and its role within the study.

Key Takeaways

- Project Definition (Why are we doing it? Who is it for? What are the KPIs?)

- Brainstorms (What are some initial ideas we have?)

- Role Alignment (Align understandings and assign roles)

Research

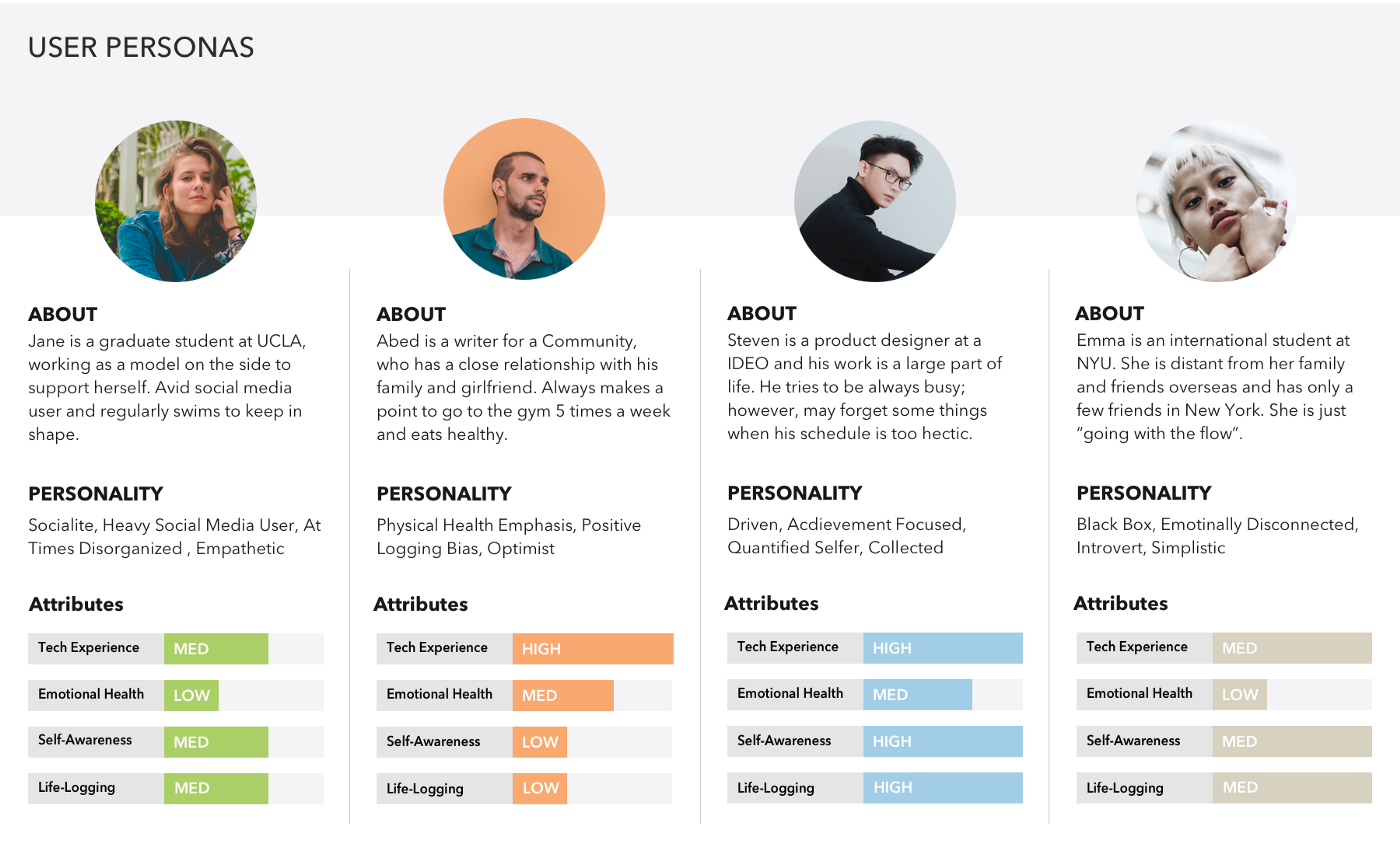

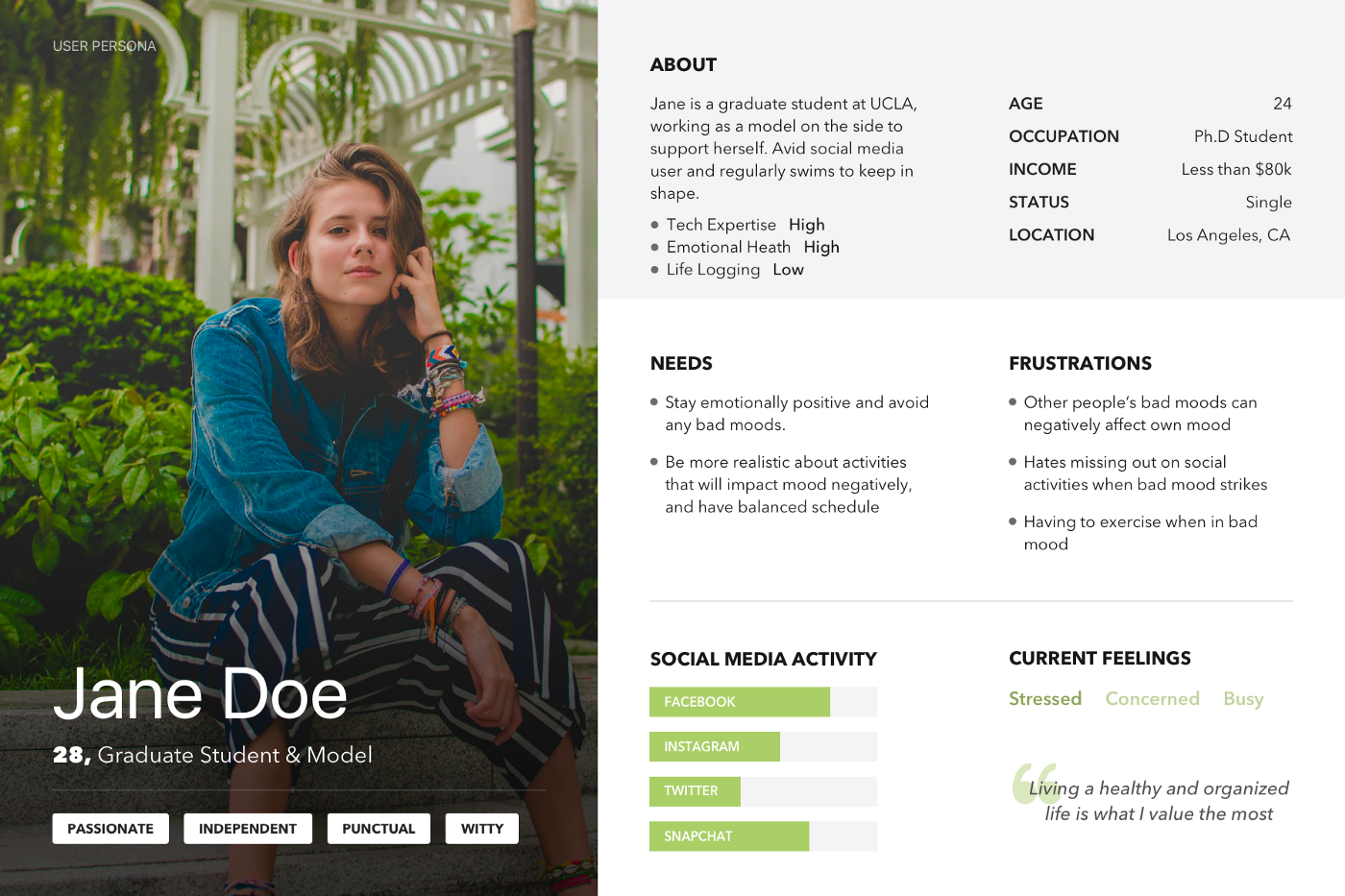

To investigate user requirements further, we conducted need-finding interviews and then synthesized the results into storyboards and user personas to guide our design efforts (examples can be found below). I also performed a competitive analysis of similar apps in the App Store and Google Play Store, found via Python scripts and scrapers, looking for ways to avoid common usability problems, inform the early stages of design, and gather reliable evidence for product changes.

Some developed user personas

User persona

Ideation & Prototyping

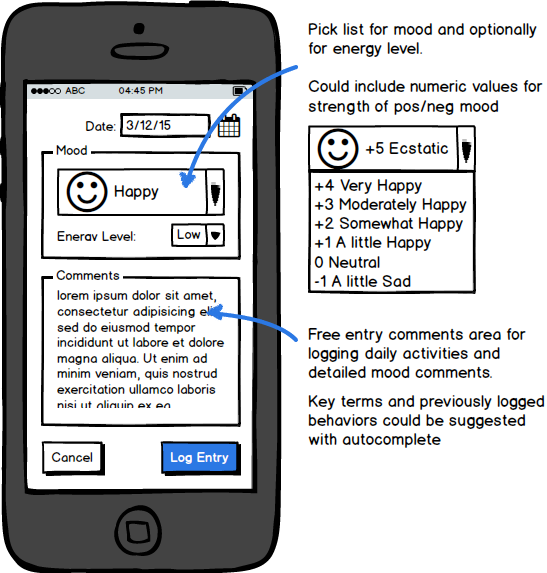

After sifting through the findings, I began sketching and rapidly prototyping the ideas we had developed. This was done using a range of tools, including Balsamiq, Sketch, and InVision.

Early sketches

Low fidelity wireframes created with Cindy T.

Higher fidelity prototypes

Usability Testing

Because of the given time frame, minimal usability testing was performed. However, we were able to iterate on designs using hallway testing, Hotjar heat mapping, and good ol’ manual user contacting/interview piloting.

Conclusion?

Challenges, takeaways, and next steps

As EmotiCal continues to be used in further studies, I will continue to improve its design. I plan to conduct additional usability tests for both mobile and desktop use to validate some of the assumptions made. Some key points of focus for additional design iterations are:

- Navigation between newly added graphs

- Possible emotional tagging system / SNS integration

- User flows for configuring preferences

At the HCI Lab, we’re always adding new features and updates. If you have any suggestions, please drop me a line at my email [email protected]

My Projects

MarkThat: Voice Powered NotesProduct Design, iXD, Prototyping

Spotify Podcast RedesignProduct Design, iXD, UI Design, Prototyping

Fiori Design System and more @SAPProduct Design, iXD, UI Design, Prototyping

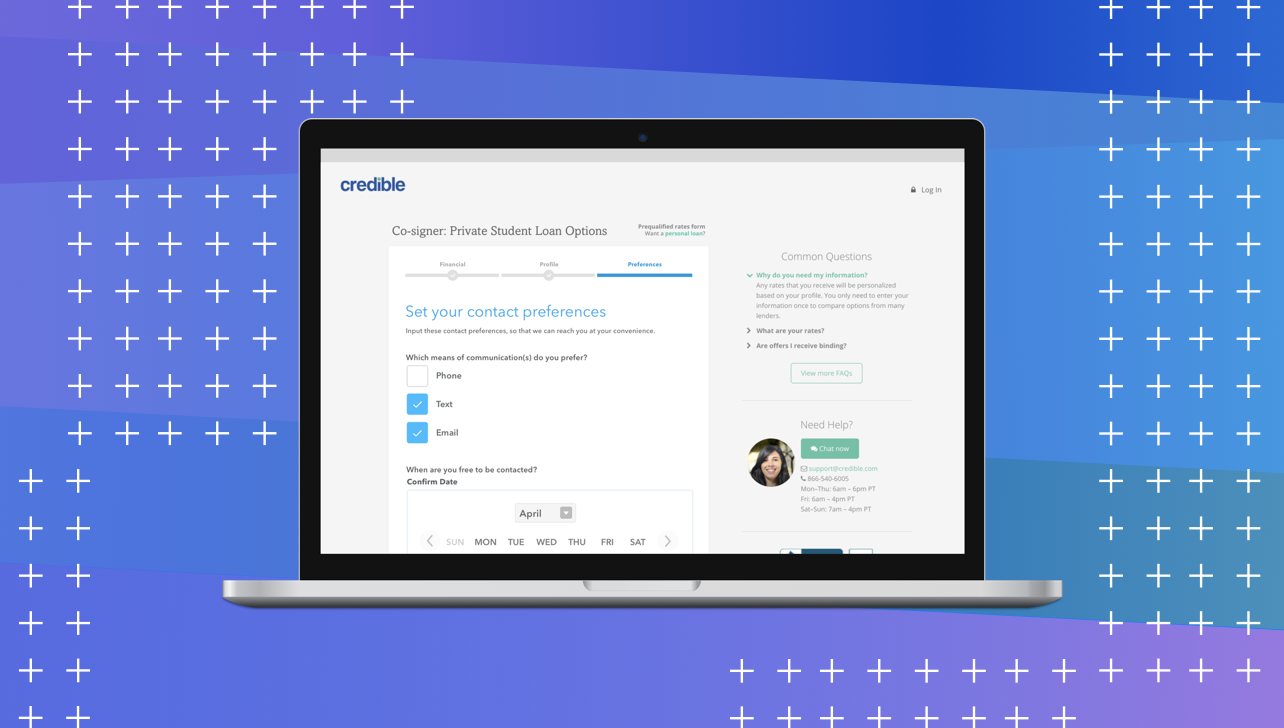

Credible — Contact PreferencesProduct Design, iXD, User Research

UCSC HCI Lab — EmotiCalResearch, UX/UI Design, Development

Voice @Yahoo!VUI Design, UX Research, Prototyping