PERSONAL PROJECT

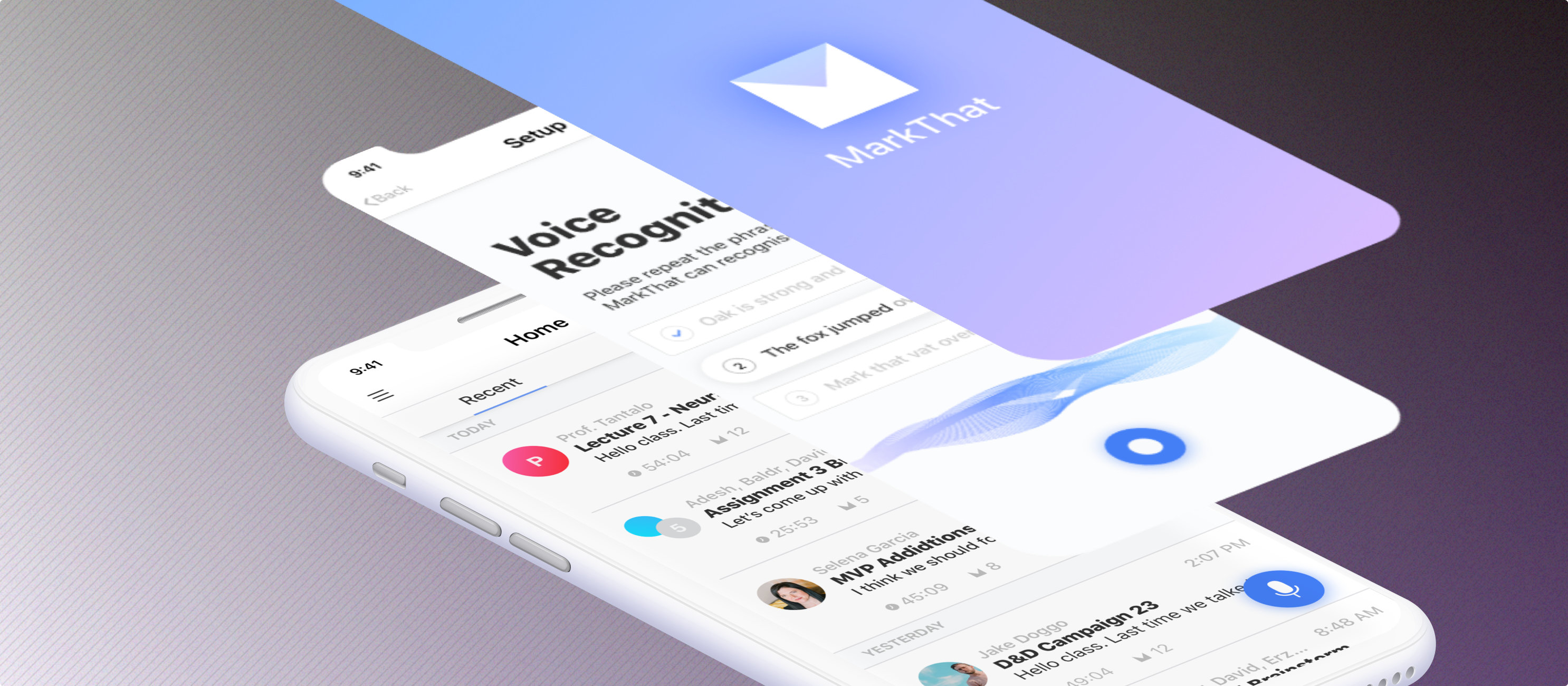

Designing and developing MarkThat: voice-powered notes

Stay in the Conversation

Introduction

Some context and description

MarkThat started as the final project for my mobile application development course. Because the project was never fully realized, I continued developing it on my own as both a design and a product exercise.

Designed for iOS, MarkThat is a voice-enabled application that augments how you take notes, so you can better focus on and participate in your conversations. With multi-party transcription, auto-summarization, and audio indexing, MarkThat makes it easy to take, find, and share your notes.

Read more about the earlier version of MarkThat here!

April–July 2019

User Research, Product Design, Prototyping, User Testing

Sketch, Adobe Illustrator, InVision, Origami

Overview

Note-taking: good, bad, and ugly

The Problem

How can people stay engaged in conversations while still taking effective notes? Not everyone is a bad note-taker. However, people who use conventional, linear note-taking methods (such as copying information down verbatim), or who skip note-taking altogether, often fail to get real value from their notes, miss important information, and lose out on active learning.

In our private lives, the workplace, and even the classroom, we spend a startlingly large amount of time communicating with others. Studies show that 70% of your waking day is spent on communication, and about 75% of that time is spent speaking or listening.

Yet most of the words we hear each day are either ignored, forgotten, or misunderstood. While email and messaging leave inherent digital records of the ideas and information being conveyed, phone calls, video conferencing, and face-to-face meetings don't allow people to retain as much of that information.

There are 55 million meetings every day in the U.S. But 90% of the information conveyed in those meetings is forgotten by participants within a week. If no one is taking excellent notes, chances are you're missing something.

Relying on memory, which is famously inaccurate, is not enough. And more’s the pity: studies show that more and better ideas are produced in face-to-face meetings, and that in-person interactions bring a host of other advantages as well. Given the fact that many of these interactions take place on the fly — at the water cooler, in the elevator, during group discussions, on the way to or from another meeting — odds are you won’t have access to any notes or records, and that much of the value of those encounters is being lost.

My last two brain cells as I struggle to take notes on information I don't even comprehend

The Solution

A voice-first audio recorder and note-taking application that offers time-stamped indexing and automatic transcription, MarkThat is designed to understand and capture both short and long-form conversations that take place between multiple people. MarkThat leverages machine learning and voice technology to allow users to better focus on their conversation or meeting by augmenting note-taking and sharing responsibilities.

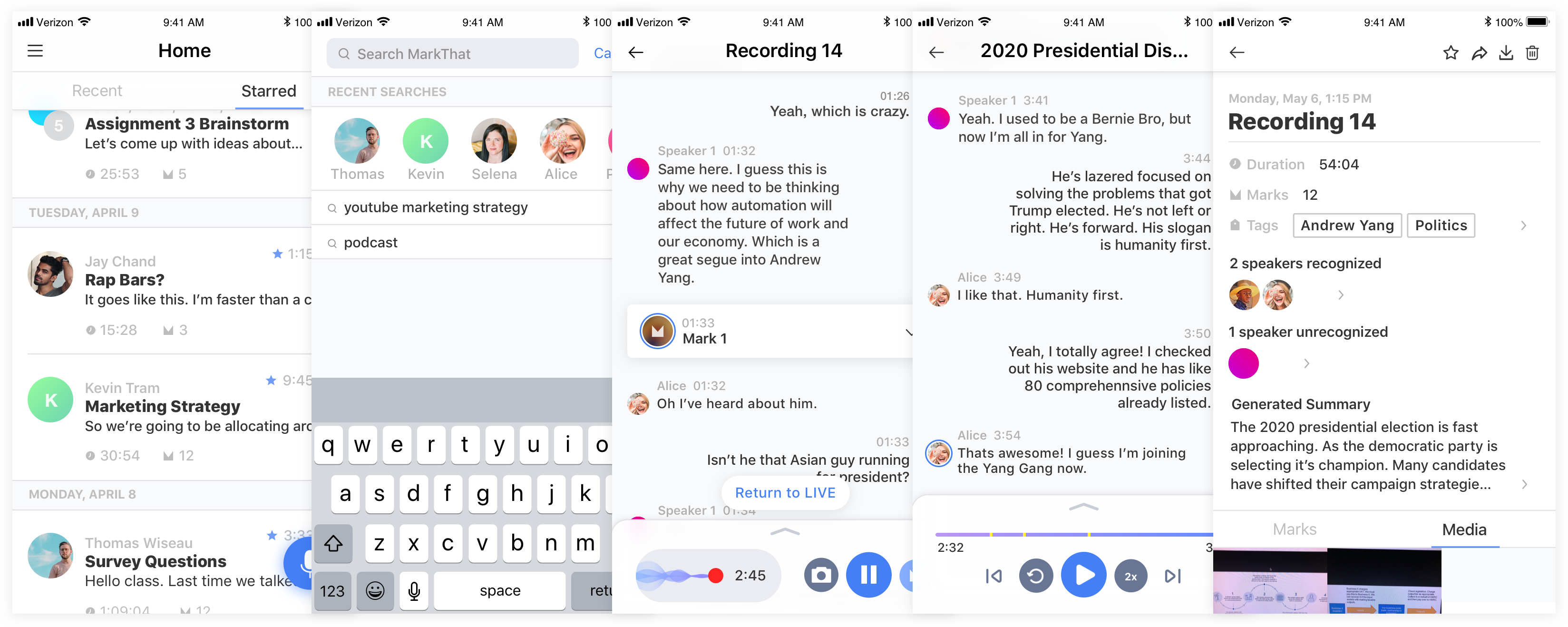

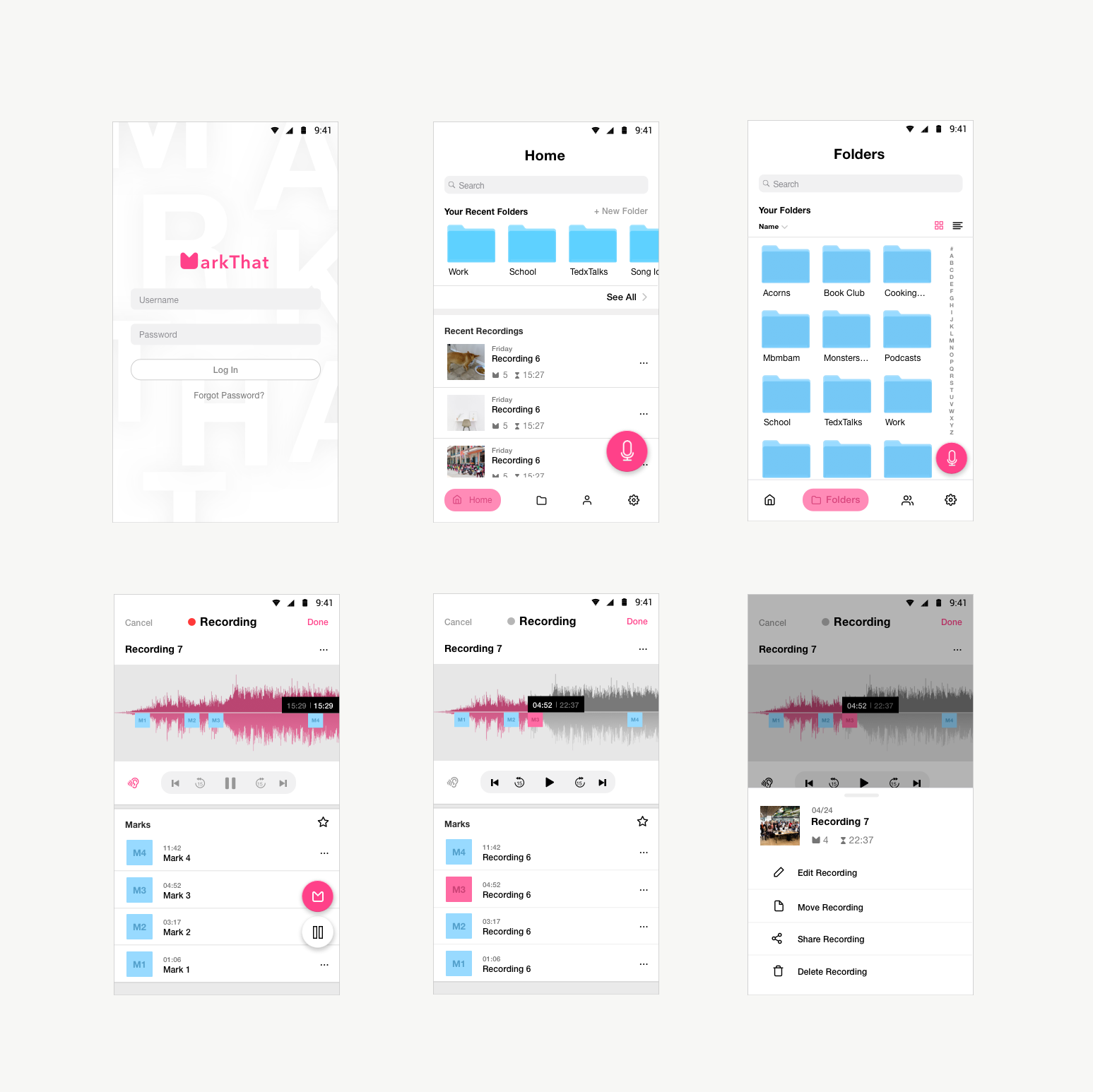

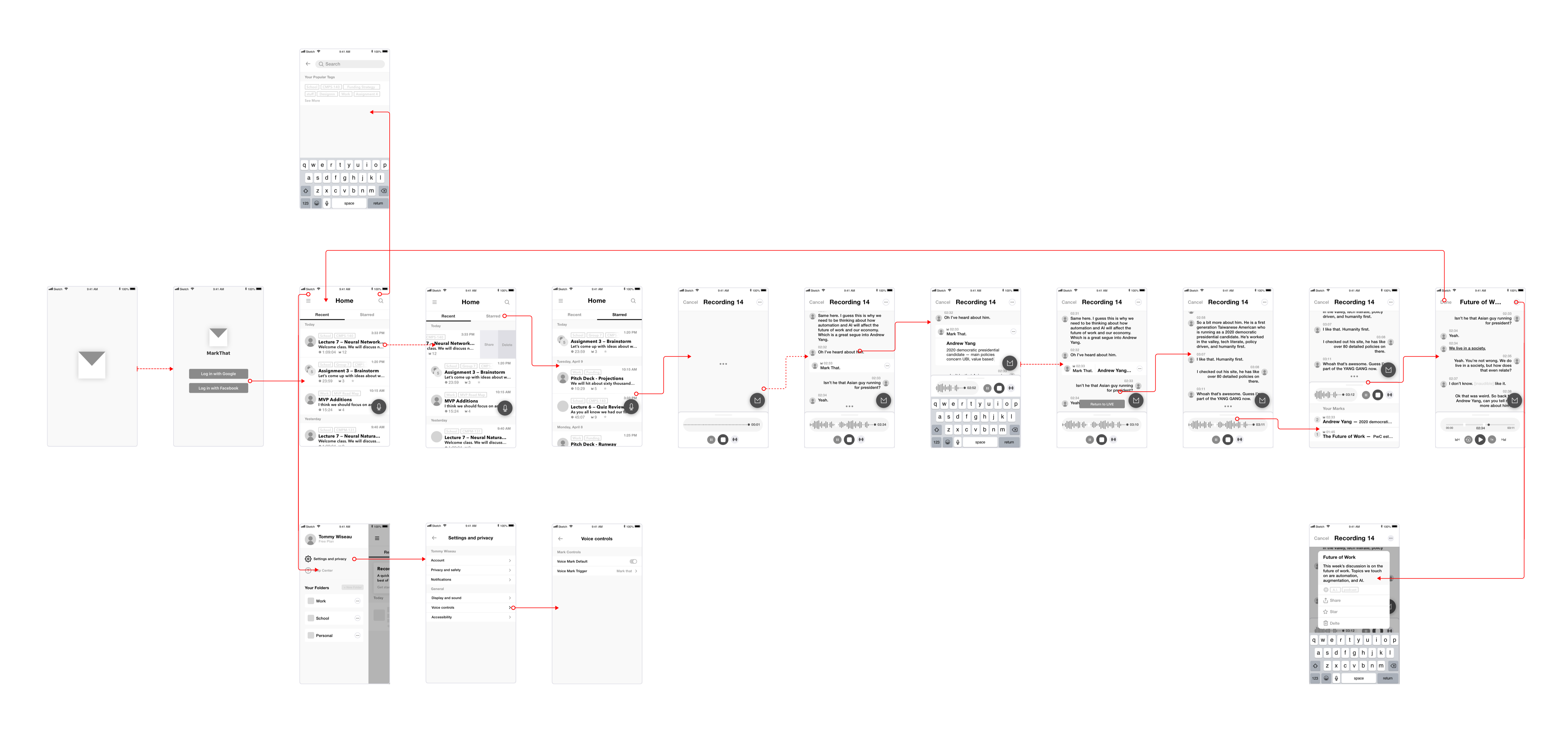

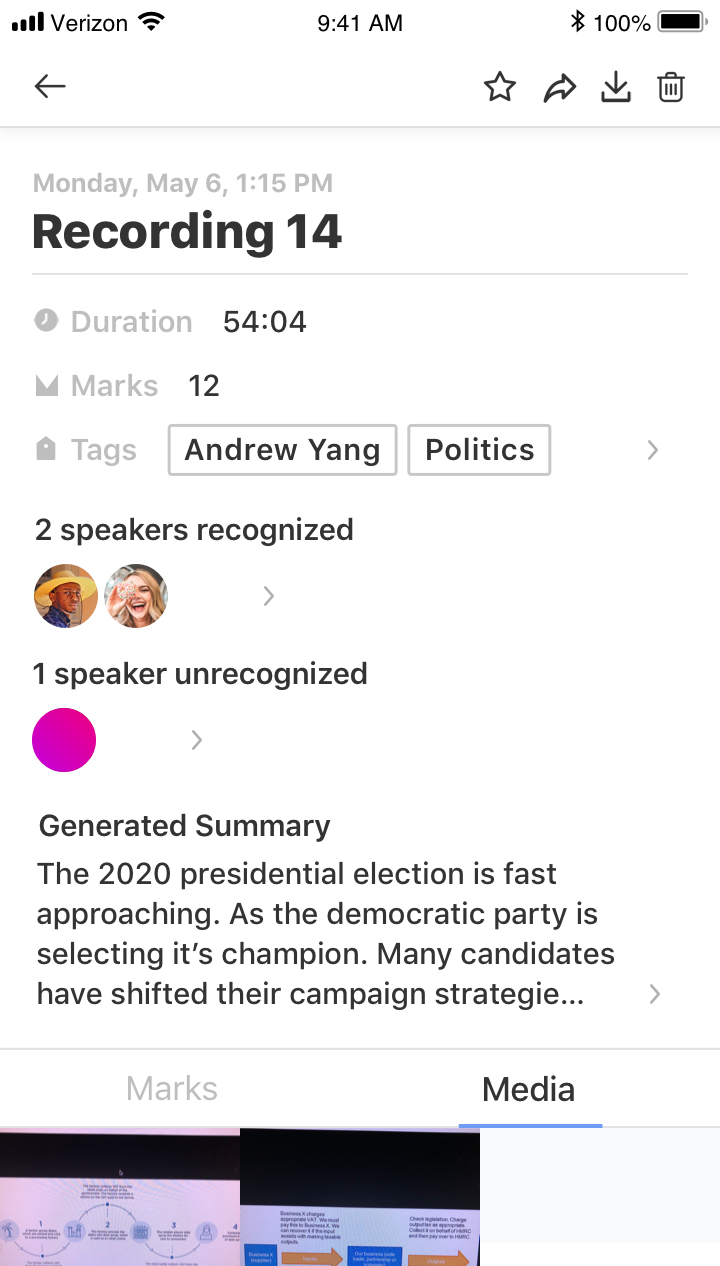

Some of the screens from the final design

With MarkThat, you will never forget what was said in a meeting, on a phone call, in a classroom, or in any other context where talking is key. Use MarkThat to record what you need, so that you never have to lose a thought or an idea again.

My Process

Improvise. Adapt. Overcome.

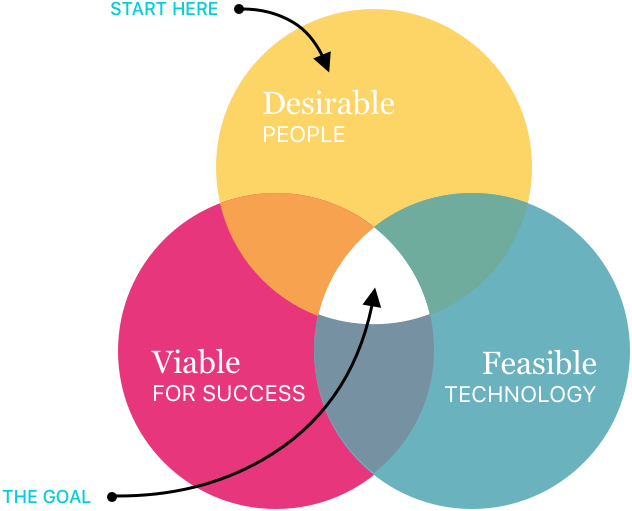

I loosely approach product design and development with a combination of IDEO's human-centered design thinking and Lean Startup methods.

Objectives

- Understand the needs of the people and market

- Generate possible designs with consideration for applicable technology

- Evaluate for success with tuning or pivoting

Methods + Techniques

Understand

- Literature Review

- Surveys

- Interviews

- Domain Research

- Personas

Generate

- Brainstorms

- Sketches

- Wireframes

- Hi-Fi Mockups

- Prototypes

- Development In Progress

Evaluate

- Usability Testing

- UX Evaluation

- UX Iterations In Progress

Understand

Define, learn, and empathize

Starting from an initial hunch about a broad target user group (college students) and problem space (note-taking), I knew I had to lead with generative research to find, narrow, understand, and define opportunities for solutions and innovation around the challenge statement below.

Initial challenge statement: create an app that simplifies note-taking and improves note usability.

But before I dove into the research, I declared these high-level goals:

- Understand the various note-taking methods and pain points, and their respective contexts.

- Narrow and define the target user group.

- Collect data from real users to quantitatively validate my findings.

- Interview users to find and understand nuances and mental models.

- Construct fleshed-out problem statements and personas for later design reference and as a success baseline.

To turn my generative findings into a concrete declaration, I outlined the most prominent problem statements and the concerns a solution would need to address.

Some key points and possible areas of concern I found were:

- Fear of information loss: for fear of missing key information, students can become so absorbed in note-taking that they produce near-verbatim notes (more common with typed notes), which prevents real active learning or participation. A mismatch between speaking pace and writing pace also contributes to frantic, incomplete notes.

- Difficulty navigating and locating information: poorly organized notes (especially long-form ones) can be hard to search or parse for specific information later.

- Accessibility: physical notes must be on hand to be accessed at all. Long-form notes are hard to share, and low-resolution photos or shared custody of a single copy are major issues.

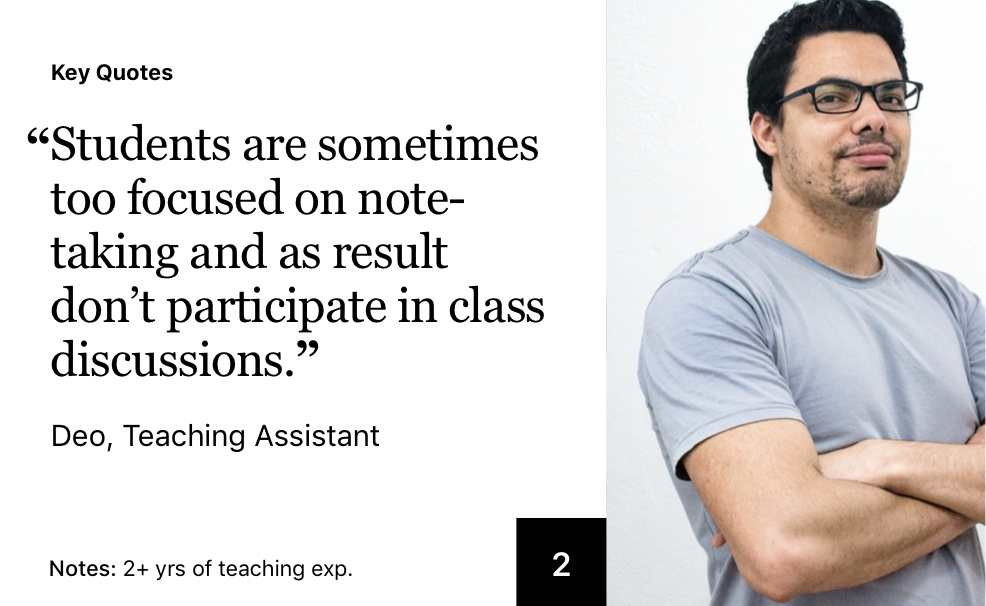

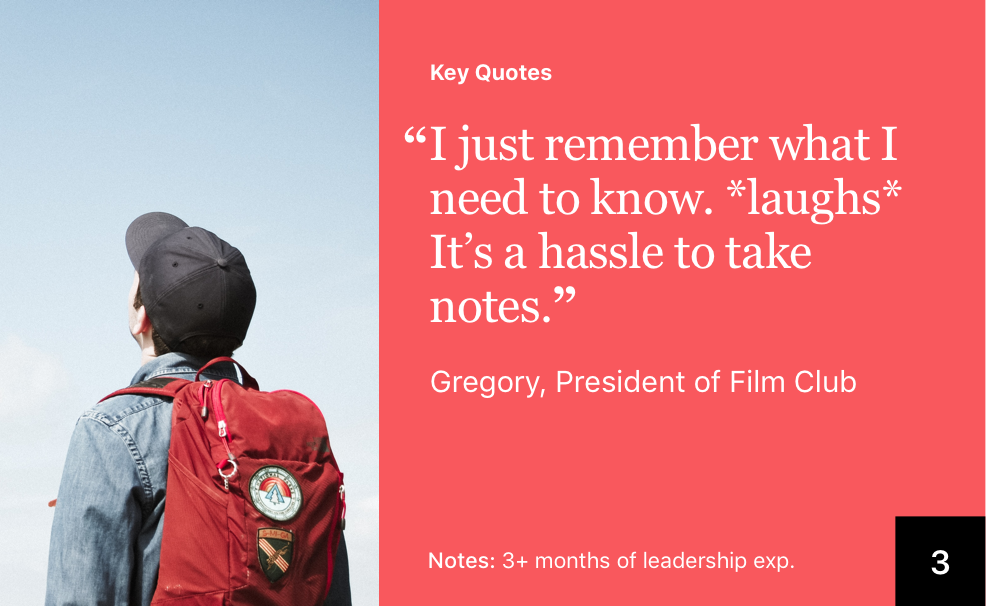

Short Interviews

To avoid personal bias and narrow the scope of my research, I first interviewed common note-taking stakeholders in college settings, such as students and TAs. I conducted 5 short interviews with people in this stakeholder group to quickly build a broader understanding of the domain and identify surface-level problems.

Surface-level problems

- Information Gap and Alteration: Note-taking doesn't guarantee good capture of information. Notes can be faulty because they are biased by the note-taker's interpretation of what is worth recording. When notes are a substitute for being there, this can result in poor outcomes for teams.

- Loss of Attention and Comprehension: Students have different note-taking habits and methods. Done poorly, note-taking can lower students' cognitive engagement and weaken their memory, all while diverting mental effort to unhelpful tasks.

- High Information Off-load: Conversations or meetings can be informationally dense, making it difficult for people to digest, retain, and navigate the material in one sitting.

A selection of key quotes I found to be insightful

Literature Review

Using abstraction laddering, I translated the surface-level problems into more abstract, core-level problems. With that, I knew to constrain my literature review to 'poor and biased information capture', 'ineffective note-taking methods', and 'difficult information retrieval and navigation' instead of starting with no direction.

Key questions & related findings

- Why do people take notes?

Generally, to maintain a permanent record for future reference and to learn in an interactive way (other reasons come up later).

- Is note-taking useful? Specifically, does taking notes help people recall the original conversation?

Academic literature points to a mixture of conflicting claims. Some studies concluded that note-taking was not beneficial to students, while others found that note-taking did lead to better recall of material compared to not taking notes. However, most researchers agree that other factors have a great impact on the effectiveness of note-taking, such as whether the notes are reviewed, the timing of the review, the length of the retention interval, and the type of audio situation and presentation.

Other interesting findings

- The process of note-taking can interfere with memory — though done with the intention of aiding recall, the sensory-motor task of note-taking will occupy part of the primary memory and interfere with incoming activity traces.

- Factors that influence note-taking: pacing (which includes both the speed of delivery and the amount and difficulty of information delivered) and cueing (which involves verbal and visual signals of emphasis, structure, and relationships).

- Many studies of note-taking find that reviewing notes (one's own or borrowed) significantly improves recall of material.

- More meaningful learning and creative thinking occur during interactions like discussions and meetings.

- Note-taking decreases the amount of interaction (and vice versa).

These findings led me to:

- Focus on the information distribution and disruption aspect of meetings, specifically note-taking

- Home in on students who irregularly take notes, don't review their notes, or don't take notes at all, and understand why

- Conduct a survey to quantitatively verify the market and secondary research

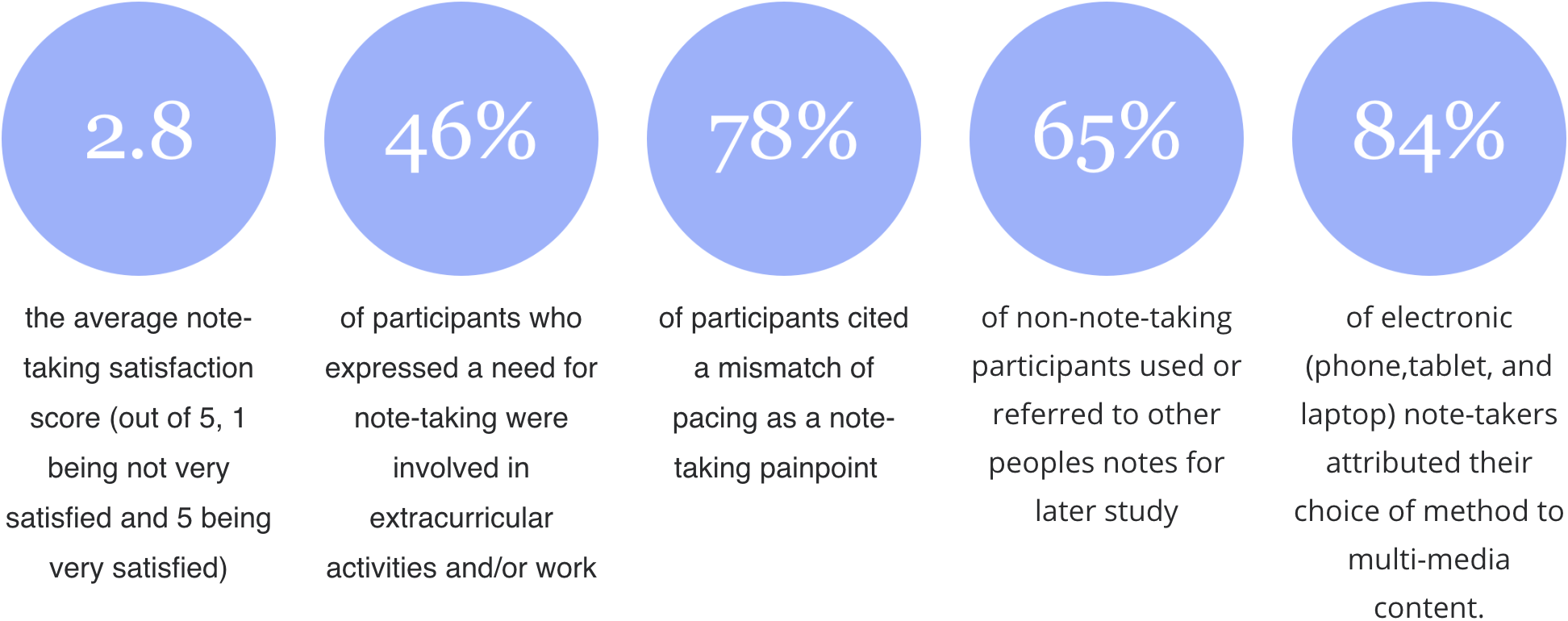

Surveys

Using Google Forms and Sheets, I reached out to students in various Facebook groups and my own network. The survey served to 1) further narrow my target user group by other possible common identifiers and 2) help me validate and learn more about the general problems, satisfaction levels, and strategies students have with their current note-taking methods, or lack thereof.

Some of the data collected (33 total participants)

Interviews

I then conducted 10 semi-structured interviews with my now-identified target users (busy students who take linear, conventional notes, plus non-note-takers) to 1) discover and understand nuances in note-taking pain points and 2) further empathize with real user stories and interactions to form accurate use cases.

Some of the questions I asked to understand note-taking situations and their effects on note-taking strategies:

- What do you take notes of?

- When would you not take notes?

- How often do you refer back to your notes?

- Do you ask for other people's notes? If so, how often?

- Do you share your notes?

- Do you ever rewrite your notes?

- Roughly what percentage of time do you spend note-taking in your meetings, conversations, consultations, lectures?

Some key findings from the interviews:

- People cited poor or slow handwriting for why they chose to take notes electronically or not at all.

- Rewriting notes is task-dependent: notes for private use are rarely rewritten, while notes for documentation are more likely to be.

- People were unable to read their co-workers' handwriting, or found their notes not detailed enough.

- The frequency of note reference depends on the task behind the creation of the notes.

- The amount of time a person spends taking notes depends on the individual. Their role, tasks, and situations inform the effort spent.

- Overconfidence in memory and not wanting to put in the effort to take notes can lead to miscommunication, regret, and wasted time.

- People listed both tasks and situations as reasons for not taking notes.

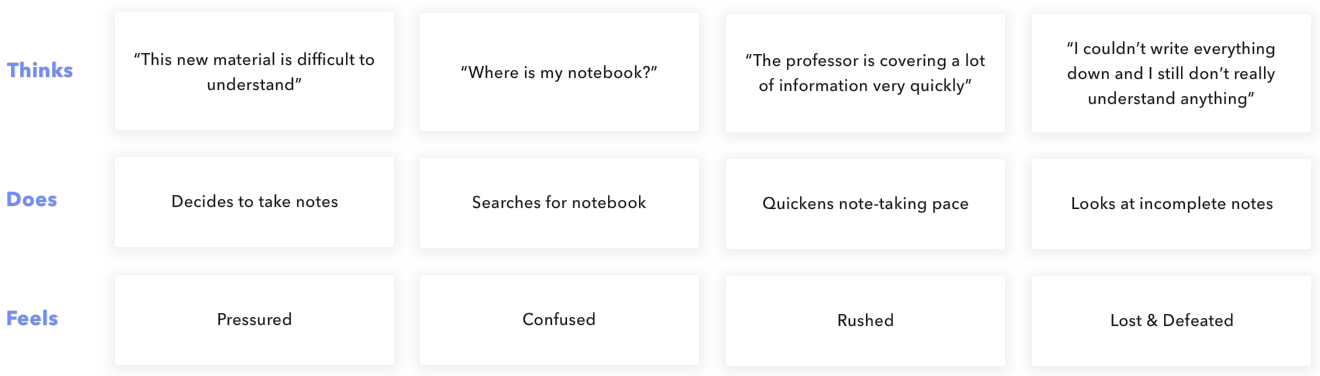

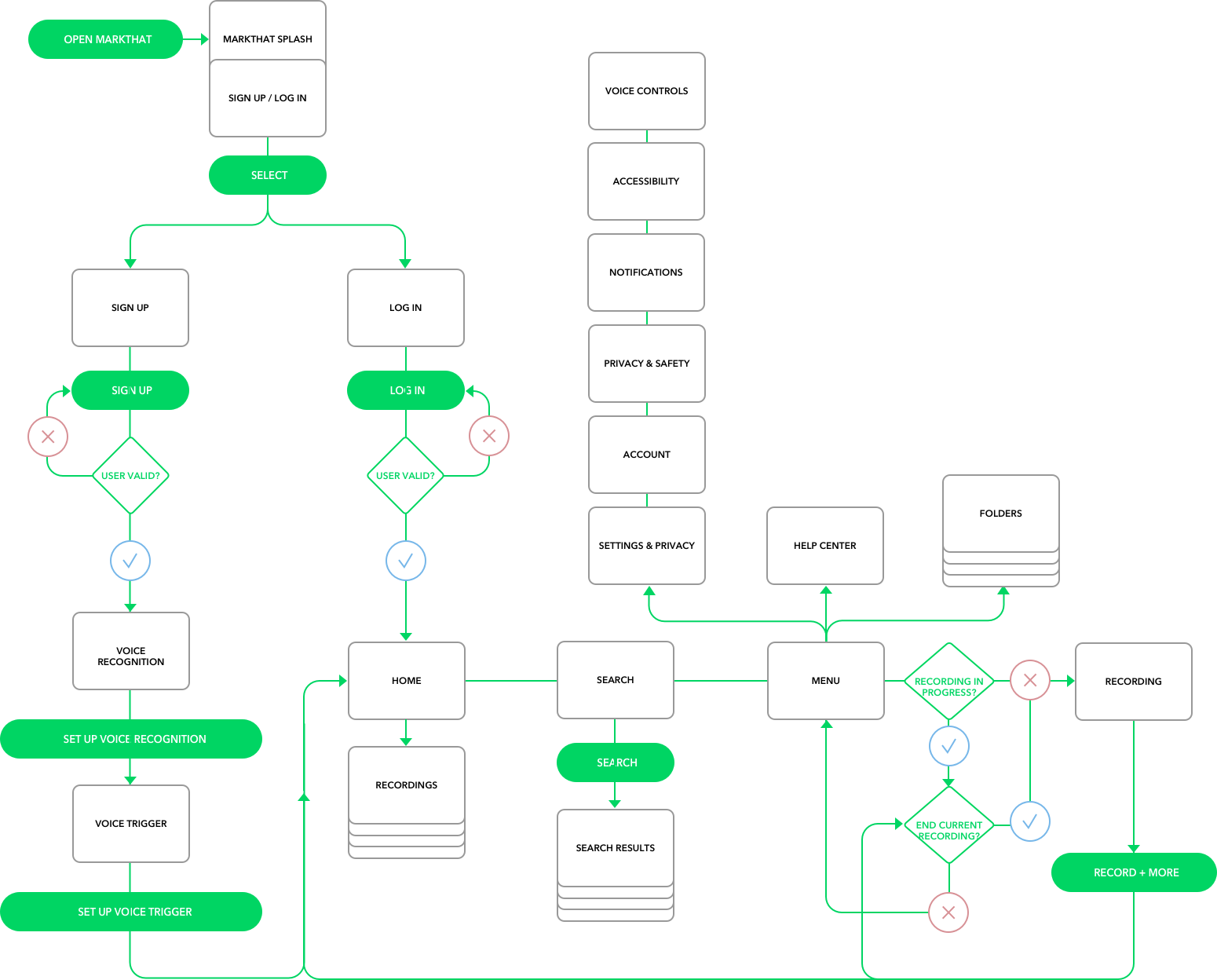

Task Flows

I synthesized a task flow from an interviewee's description to better visualize their situation.

User Pain Points

Using the above findings from the research, I condensed the following core problems that I wanted my product to solve.

- Poor notes because of having to participate in the discussion - There are some occasions where (a) minutes are not being taken in a meeting or consultation and (b) there is a need to participate in the discussion. This means that adequate notes cannot be taken.

- Inadequate notes when it is not socially acceptable to take notes - There are occasions and environments when a person may feel awkward taking notes such as in the case of speaking with an upset/emotional individual or about a sensitive subject where engaged attention is demanded.

- Poor notes because the meeting moves too fast - Sometimes points are missed because the meeting or consultation is moving too rapidly to write down everything that is necessary.

- Illegible handwriting - Some handwriting cannot be read by others, or even by the writer.

- Lack of verbatim record - In some situations, a verbatim record is necessary such as in consultations to keep an exact record of what each person said so that no one can argue that they or the other person said something that they did not. Also, a complete record provides an unbiased source of information that doesn't dismiss anything.

- Notes are lost or altered - As a backup in situations where notes are misplaced or there is uncertainty over whether the notes may have been altered by someone else, people need to be able to confirm the validity of the notes.

- Problems with efficient retrieval and storage - Especially with long-form notes, people need an accessible, centralized source to store and retrieve their notes.

- No dates, times, or indices in notes - For people who forget to date their notes, automatic time stamping would solve this problem. In addition, a link between people's notes and an in-built diary using the timestamps would help in the retrieval of notes.

- Poorly organized notes - In many note-taking situations there is little time to structure notes as they are written, so people need a system that supports editing and rewriting to make notes better formatted and easier to understand.

- Difficulties in reviewing the key points - When people review their notes, they often just want to see the most important points or actions. Since people usually mark these key points (e.g. with asterisks or highlighting) while taking notes, it would be useful if those points could be copied automatically into the summary/action boxes for easy review.

Design Goals

Based on my takeaways on user needs, pain points, and the gaps in current solutions, I created design goals to solidify and align my core values.

Boost Conversation Engagement

User Goal — Focus and contribute to the conversation without multi-tasking or being preoccupied

Design Implications — Provide an unobtrusive or passive solution to record information

Improve Accessibility and Prevent Information Loss

User Goal — Quickly and easily record all the information from their conversation

Design Implications — Allow users to capture all the necessary information from their conversation

Improving Content Digestion & Navigation

User Goal — Quickly locate and digest meeting content.

Design Implications — Help users efficiently find important information and traverse the meeting.

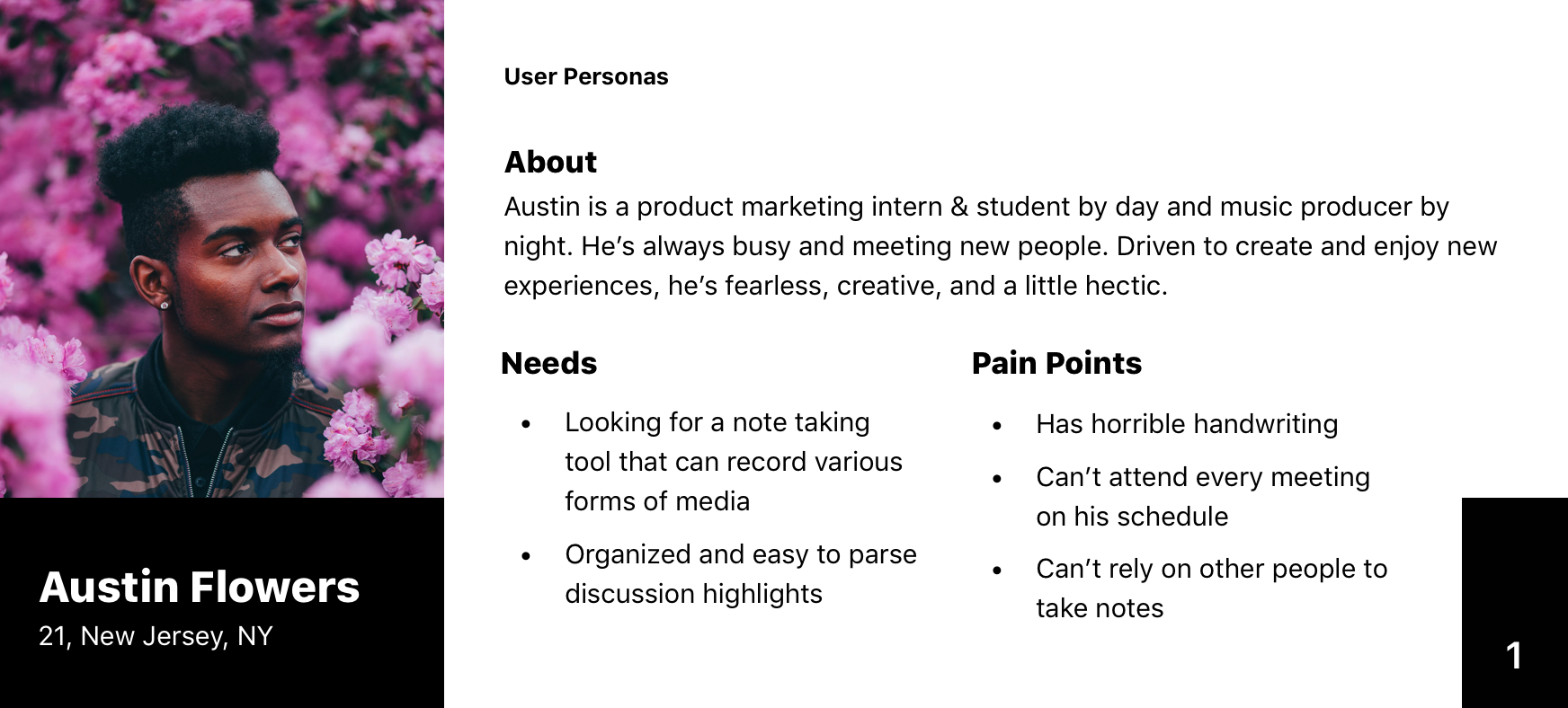

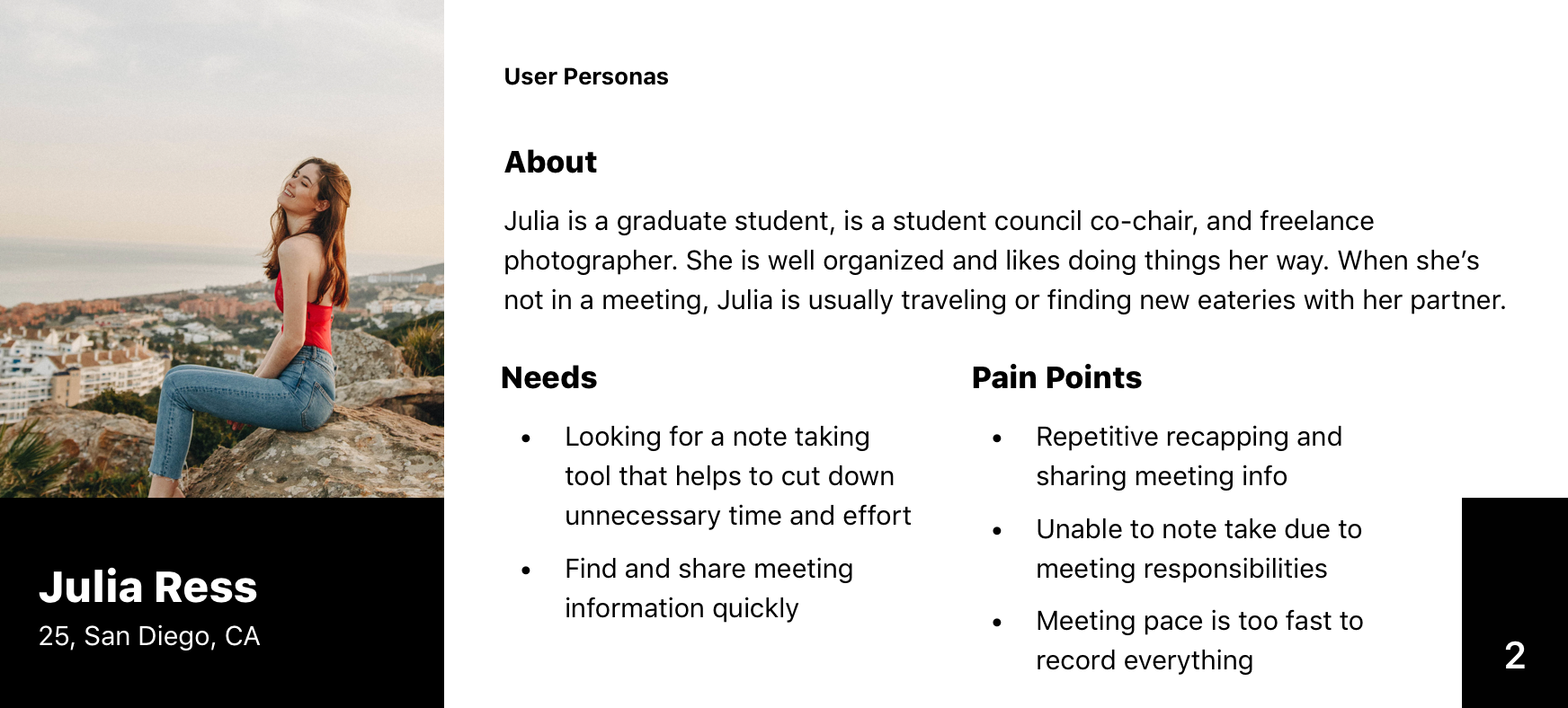

Personas

To establish empathetic target users, I developed personas based on the research done. Each persona provided contextual information, needs, and user pain points.

Competitive Analysis

To find both inspirations and gaps in existing products, I looked at a couple of solutions that survey participants and interviewees mentioned.

Google Docs

Pros

- Provides real-time collaboration

- Has good file accessibility/compatibility

- Allows quick info navigation using table of contents feature

- Has various formatting and editing features

Cons

- Requires an internet connection (to a degree)

- Encourages multitasking activities

Voice Memos

Pros

- Has a simple and efficient interface

- Encourages conversation engagement

- Can be used without internet connection

- Records everything, no information loss

Cons

- Has limited note-taking functionality

- Has few or no organizational and search features

Evernote

Pros

- Has a variety of note-taking options (pictures, voice recordings, file attachments, etc.)

- Has good accessibility

- Is user-friendly

Cons

- Requires an internet connection (no offline mode unless on the Business, Premium, or Plus plans)

- Has a bad tagging system and cross-linking functionality

Only Evernote really came close to addressing my identified issues with engagement and sharing; however, it still lacked key functionality and focus.

Generate & Evaluate

Ideate, visualize, and iterate

With the design goals in mind, I could finally start ideating on possible solutions, which I framed around the main actions people take with their notes:

Taking Notes

- Promote conversation engagement and participation

- Improve the quality of notes

Referencing Notes

- Improve organization

- Speed up information search

- Simplify content digestion

Sharing Notes

- Streamline sharing

- Simplify content location and digestion

Referencing the design goals and findings, I brainstormed and sketched any solution that could fit my outlined design goals. My ideas ranged from personalized voice agents to note-taking gamification; however, one stood out as feasible (for a junior developer), appropriate (for addressing the design goals), and familiar (it builds on the concept from MarkThat v1 and Voice Memos).

Return to Initial Concept

I was drawn back to v1 of MarkThat, a product idea that could address the design goals I had established with some further refining.

Like Apple's Voice Memos app, MarkThat v1 let users make audio recordings. It also let them place time-stamped indexes, organize recordings in a folder system, and search recordings by title or description.

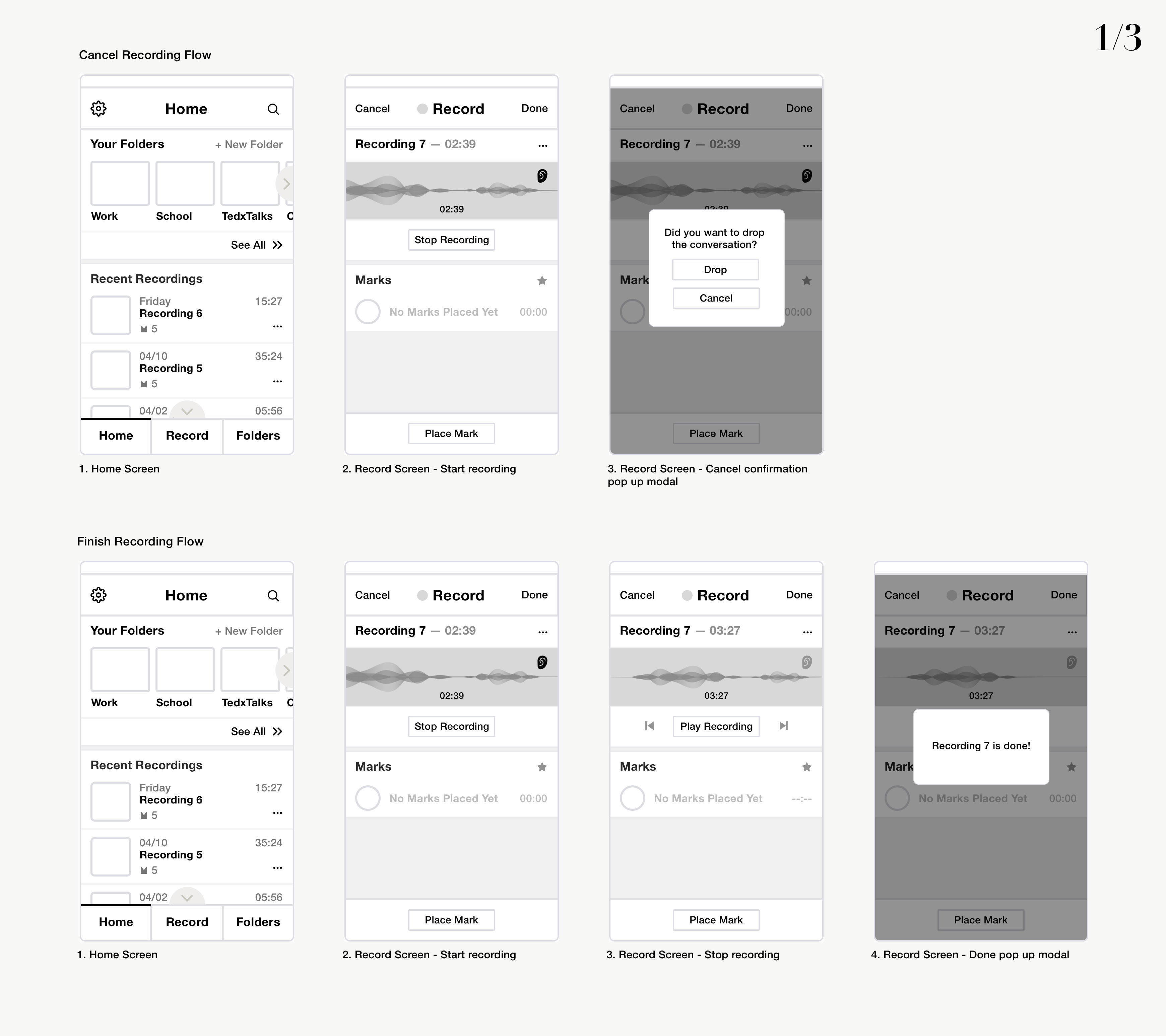

Some mockups for v1 that I made

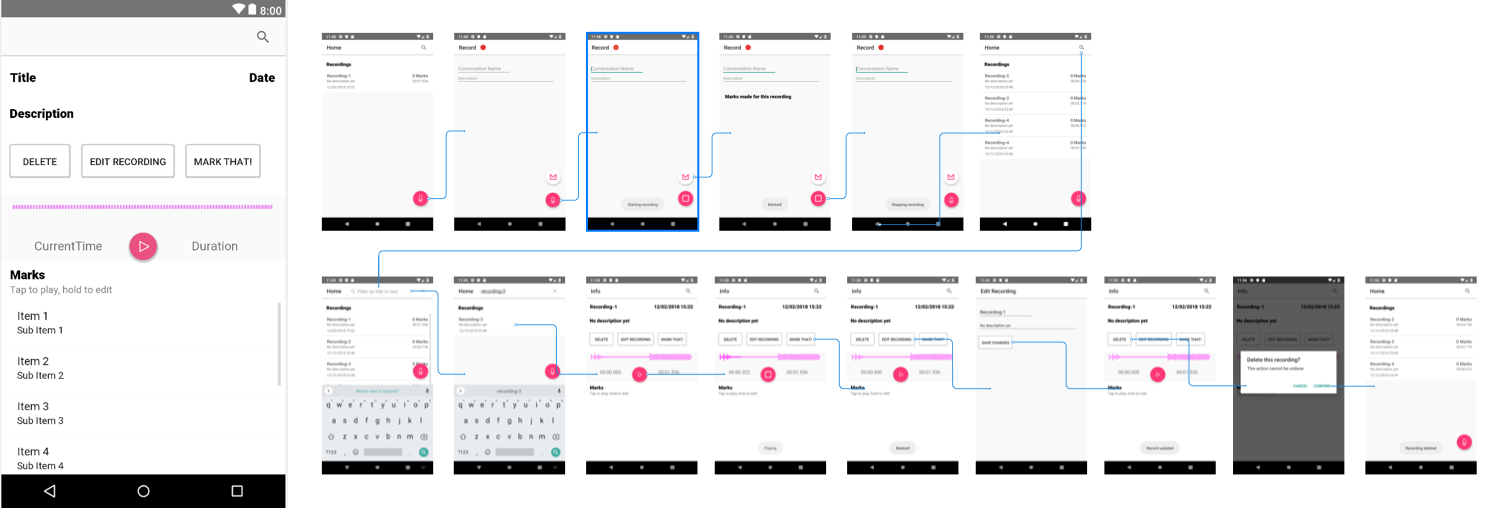

I ran 2 usability tests with a simple but working Android app that my friends and I built from the v1 specs.

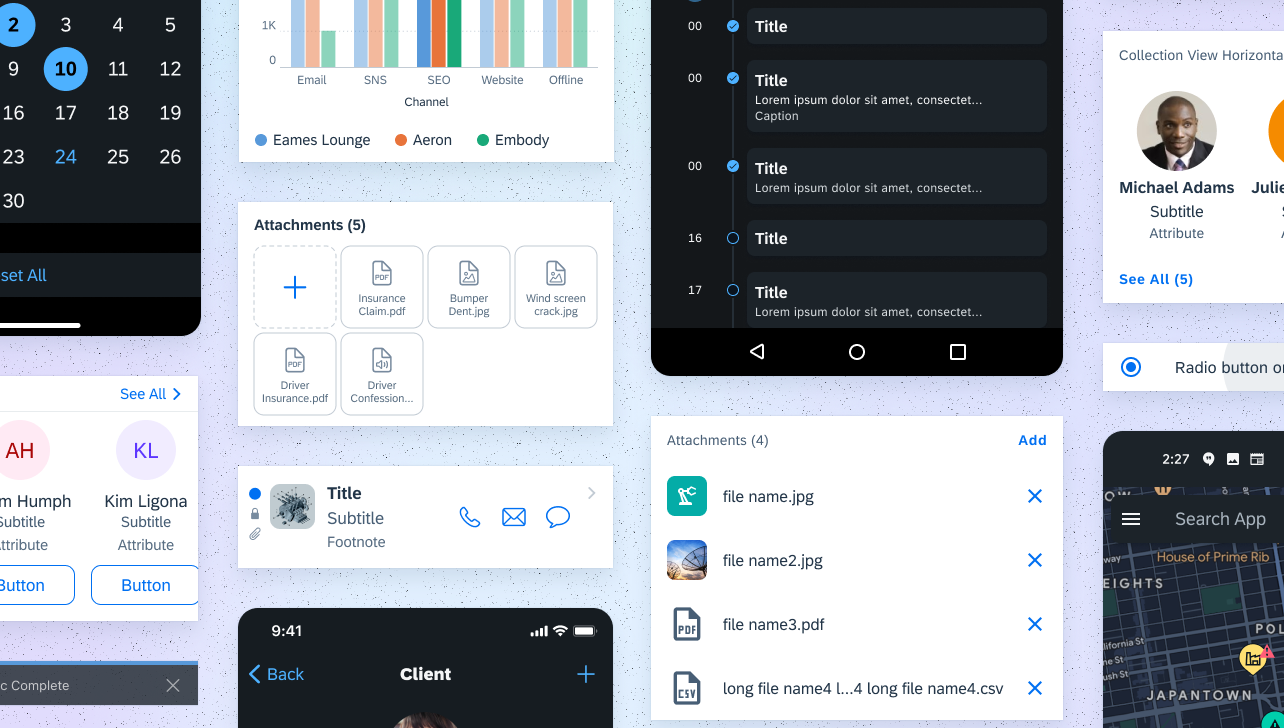

Shots of the Android app used for user testing

Usability Issues

- Tethered to phones: To trigger 'marks' (the time-stamped indexes), users needed to have their phone on hand and tap the Mark floating action button, which kept their eyes on the screen instead of on the people they were talking to.

- Slow content digestion: On playback, users had to jump to an audio index and still listen to the audio to digest the content.

My solution + some extra goodies

- Time-stamped Indexing Voice Command: Users trigger 'marks' (the time-stamped indexes) with a simple voice command, so they can look away from their phone screens and instead look people in the eye. (Another option would be IoT wearables, such as smartwatches or rings, as an additional form factor or interface.)

- Audio Transcription: Instead of listening to the playback, users can read or skim the transcription, which is faster (on average, by about 150 wpm).

- Playback Speed Control: Since audio still captures sounds that can't be transcribed, along with tone and subtext, users can speed up playback to as much as 6x.
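To make the speed trade-off concrete, here is a back-of-the-envelope sketch comparing listening time at a given playback speed with reading time. The rates are assumptions, not measurements from this project: roughly 150 wpm for conversational speech, and a reading rate supplied by the caller. The function names are hypothetical.

```python
MAX_PLAYBACK_SPEED = 6.0  # MarkThat's stated maximum playback speed


def listening_minutes(word_count: int, playback_speed: float = 1.0,
                      speaking_wpm: float = 150.0) -> float:
    """Minutes needed to listen to `word_count` spoken words at a playback speed.

    speaking_wpm is an ASSUMED average conversational speaking rate.
    """
    if not 0 < playback_speed <= MAX_PLAYBACK_SPEED:
        raise ValueError(f"playback_speed must be in (0, {MAX_PLAYBACK_SPEED}]")
    return word_count / (speaking_wpm * playback_speed)


def reading_minutes(word_count: int, reading_wpm: float) -> float:
    """Minutes needed to read a transcript of `word_count` words at an assumed reading rate."""
    return word_count / reading_wpm


# A 1,500-word conversation at 1x, at 2x, and read at an assumed 300 wpm.
print(listening_minutes(1500), listening_minutes(1500, 2.0), reading_minutes(1500, 300))
```

Under these assumptions, skimming the transcript and doubling the playback speed save about the same time; the transcript wins for finding a specific point, while playback keeps tone and non-verbal sounds.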

Wireframes + Mockups + Prototypes

Unfortunately, I didn't always do a good job of documenting my work, so I can't share my sketches. However, I can show my initial wireframes for MarkThat v2 and the subsequent bad mockups of v3, which did not follow a structured design system (no adherence to a grid, color palette, font, etc.).

Using Sketch, Craft, and InVision, I tested my designs with a combination of "paper" digital prototyping and Wizard of Oz-style user testing, which led to further feature additions and interactions in the final design.

Final Design + Decisions for Now

Using an 8-point soft grid and the lessons learned from v3, I designed v4 of MarkThat.

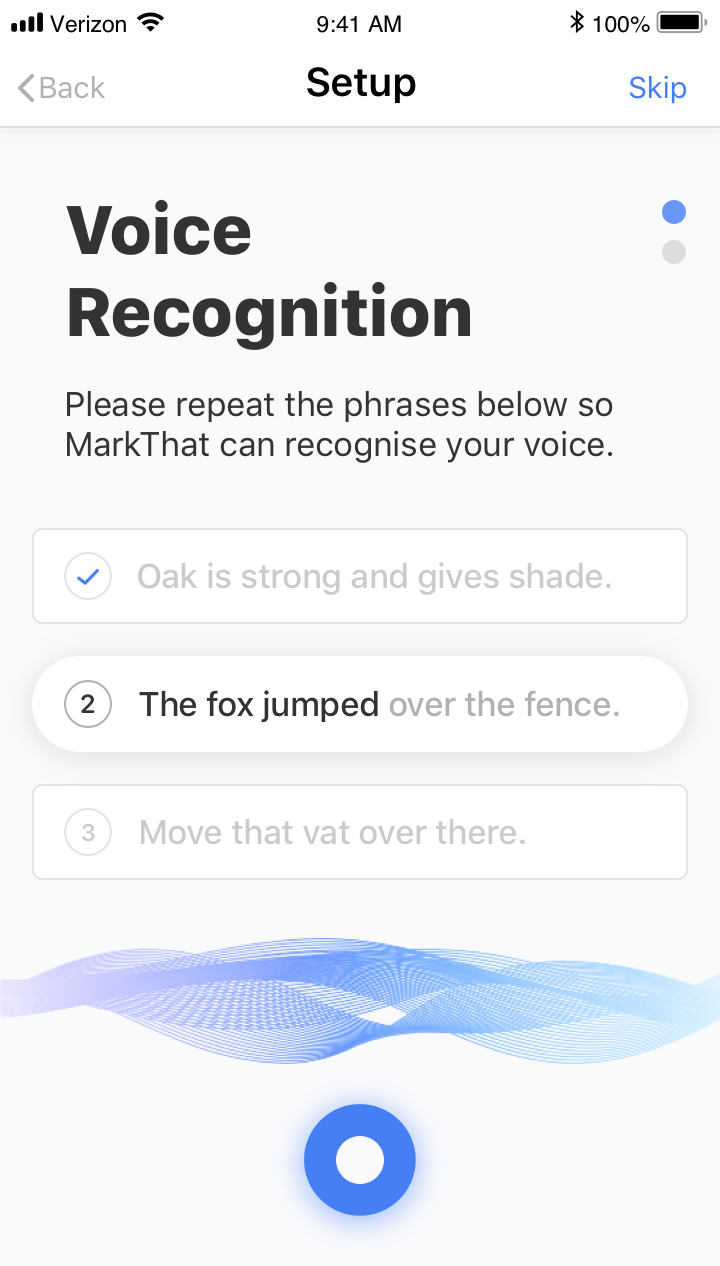

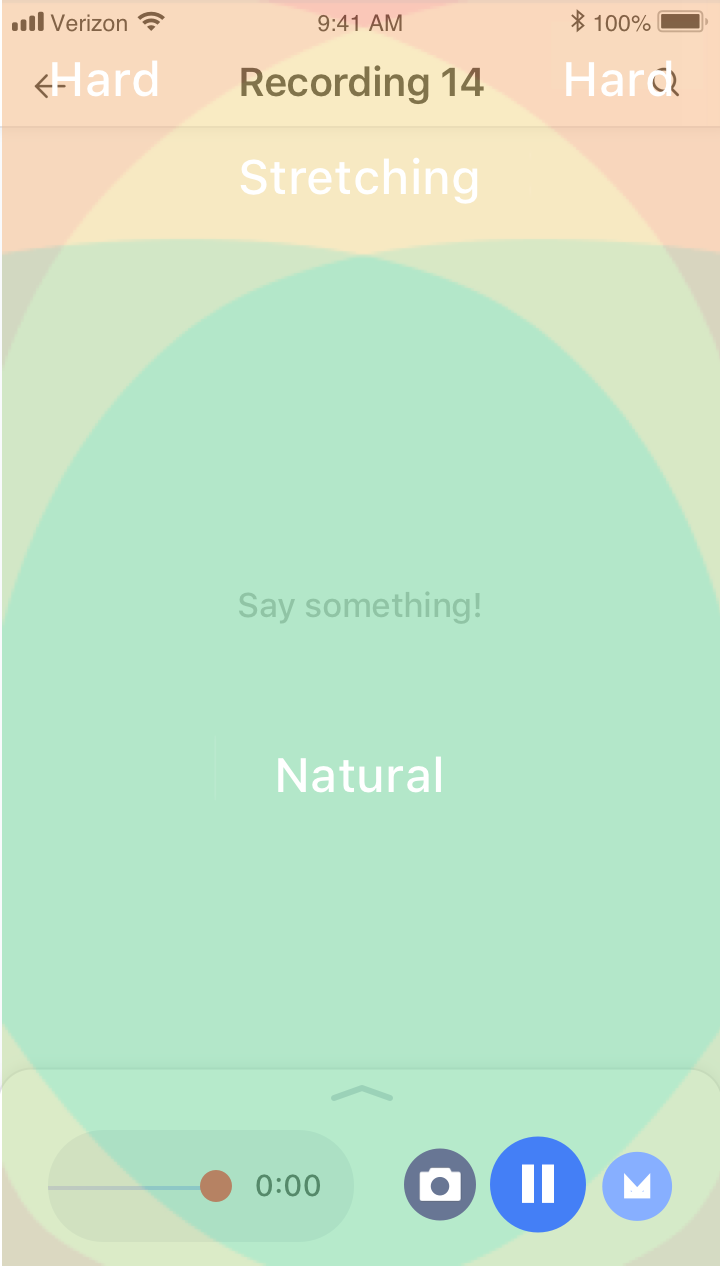

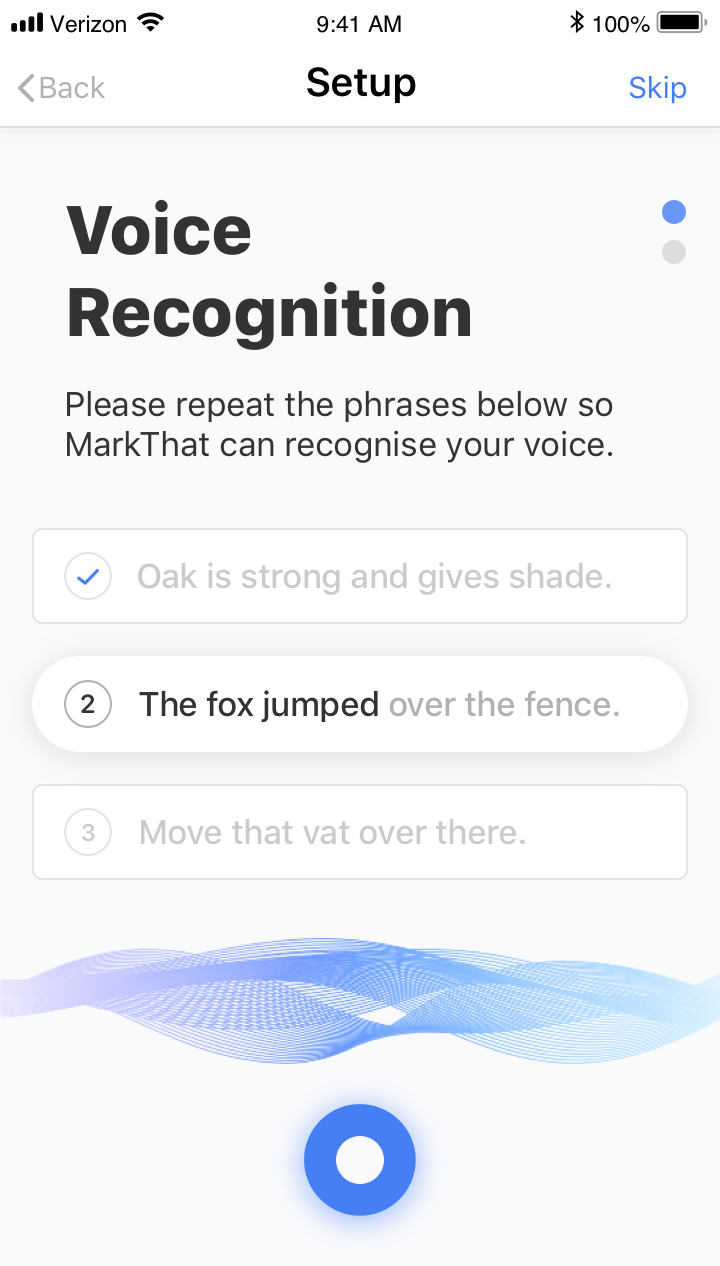

To set up personal voice recognition, the user repeats 3 Harvard sentences. After pressing the record button, the user only needs to read the current phrase aloud; the app automatically moves on to the next one once it recognizes that the phrase is complete.

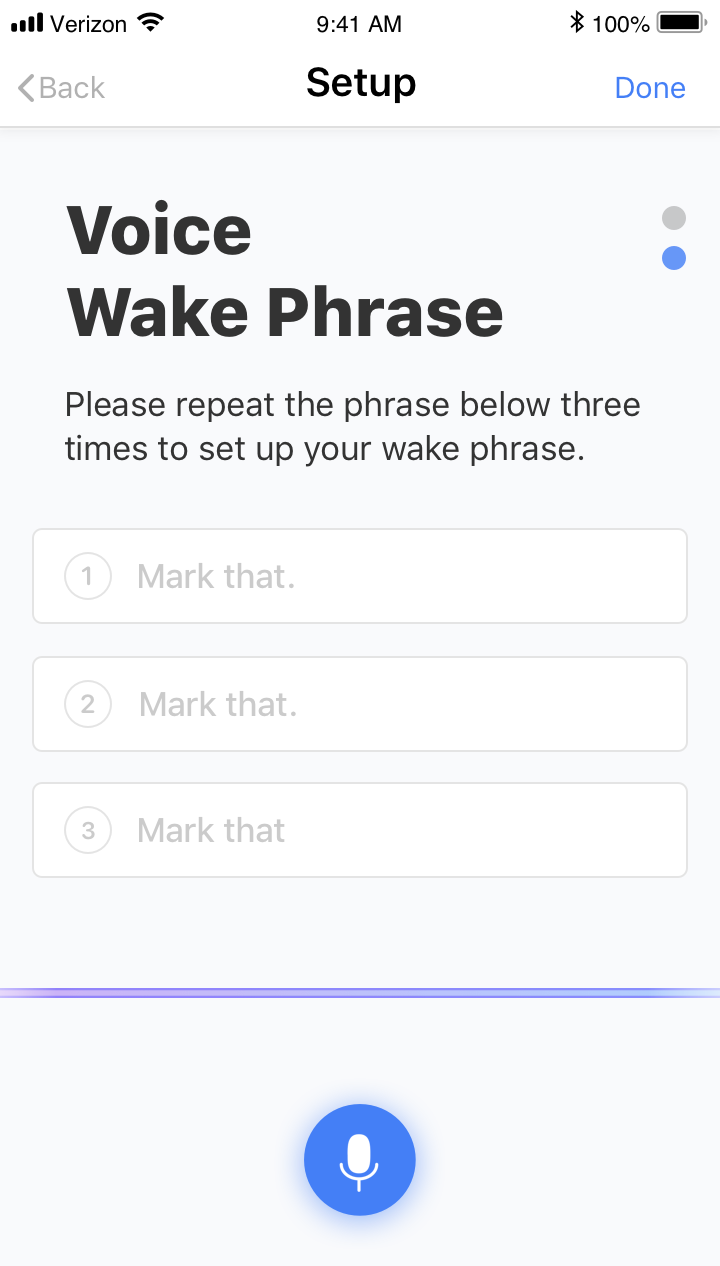

The last setup step is repeating the default wake phrase 3 times, so that MarkThat's wake-word engine can accurately detect the phrase "Mark that" in a stream of audio.
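A real wake-word engine listens to raw audio with an acoustic model. As a deliberately simplified stand-in (a swapped-in technique, not how MarkThat's engine works), the sketch below scans a stream of timestamped words from a speech recognizer and places a Mark wherever "mark that" appears. The `Word` type, `detect_marks`, and the 2-second cooldown are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple

WAKE_PHRASE: Tuple[str, ...] = ("mark", "that")


@dataclass
class Word:
    text: str
    start: float  # seconds from the start of the recording (assumed recognizer output)


def detect_marks(words: Iterable[Word], cooldown: float = 2.0) -> List[float]:
    """Return the timestamps (seconds) where the wake phrase begins.

    A cooldown suppresses duplicate Marks when the phrase is repeated quickly.
    """
    marks: List[float] = []
    window: List[Word] = []
    for word in words:
        # Keep a sliding window as long as the wake phrase.
        window.append(word)
        if len(window) > len(WAKE_PHRASE):
            window.pop(0)
        tokens = tuple(w.text.lower().strip(".,!?") for w in window)
        if tokens == WAKE_PHRASE:
            t = window[0].start
            if not marks or t - marks[-1] >= cooldown:
                marks.append(t)
    return marks
```

Placing the Mark at the start of the phrase, rather than when recognition finishes, keeps the index closer to the moment the user meant to capture.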

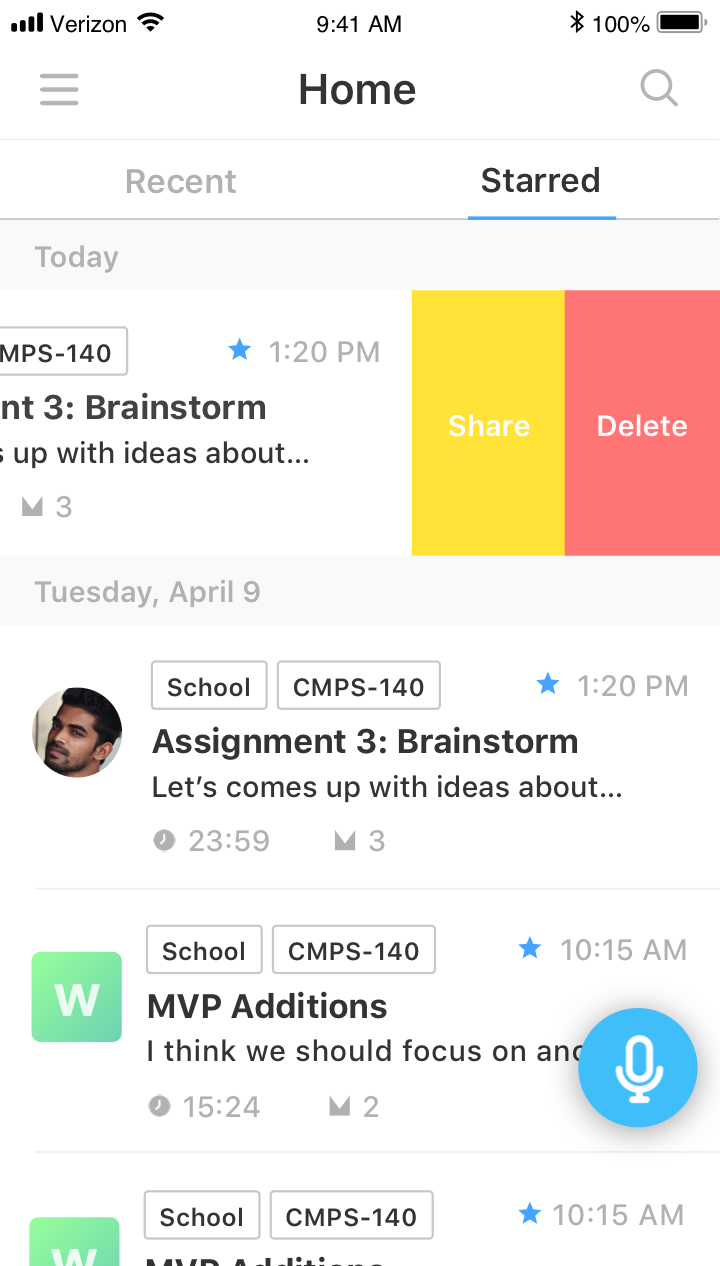

Users can quickly access their recent or starred conversations with the tab navigation bar.

Recordings are people-centric, so that users can find conversations in a more natural way.

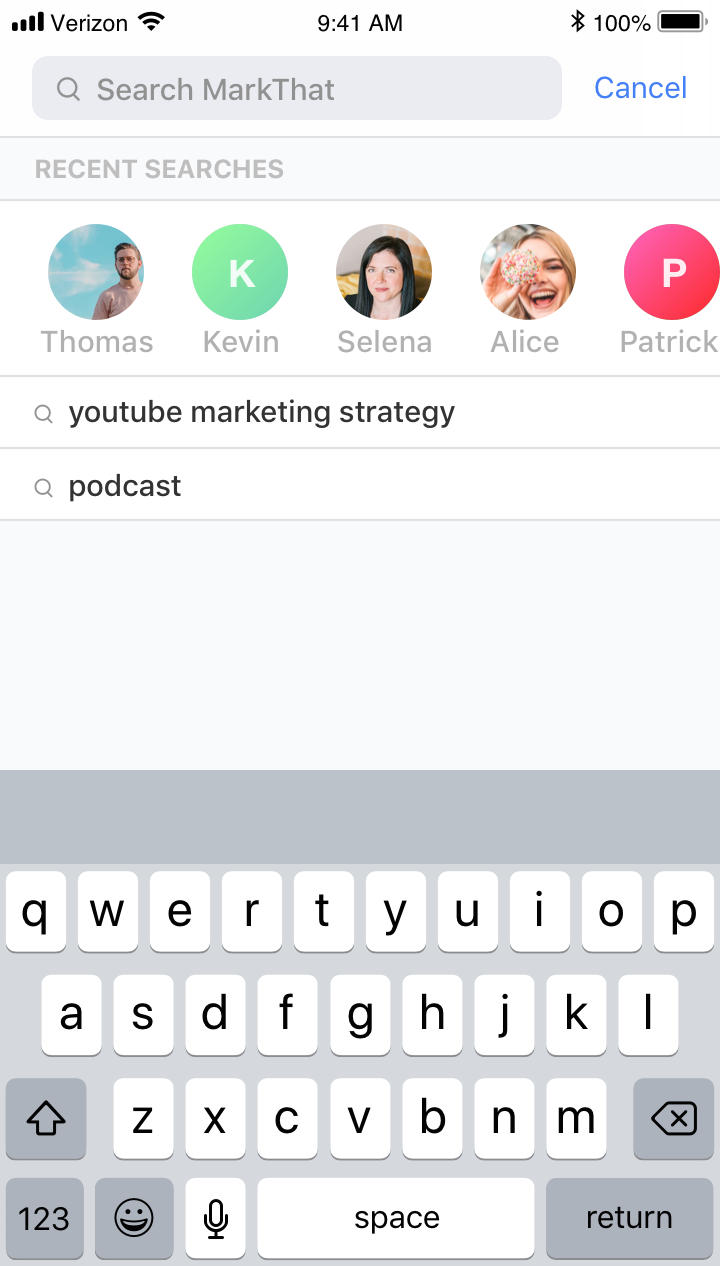

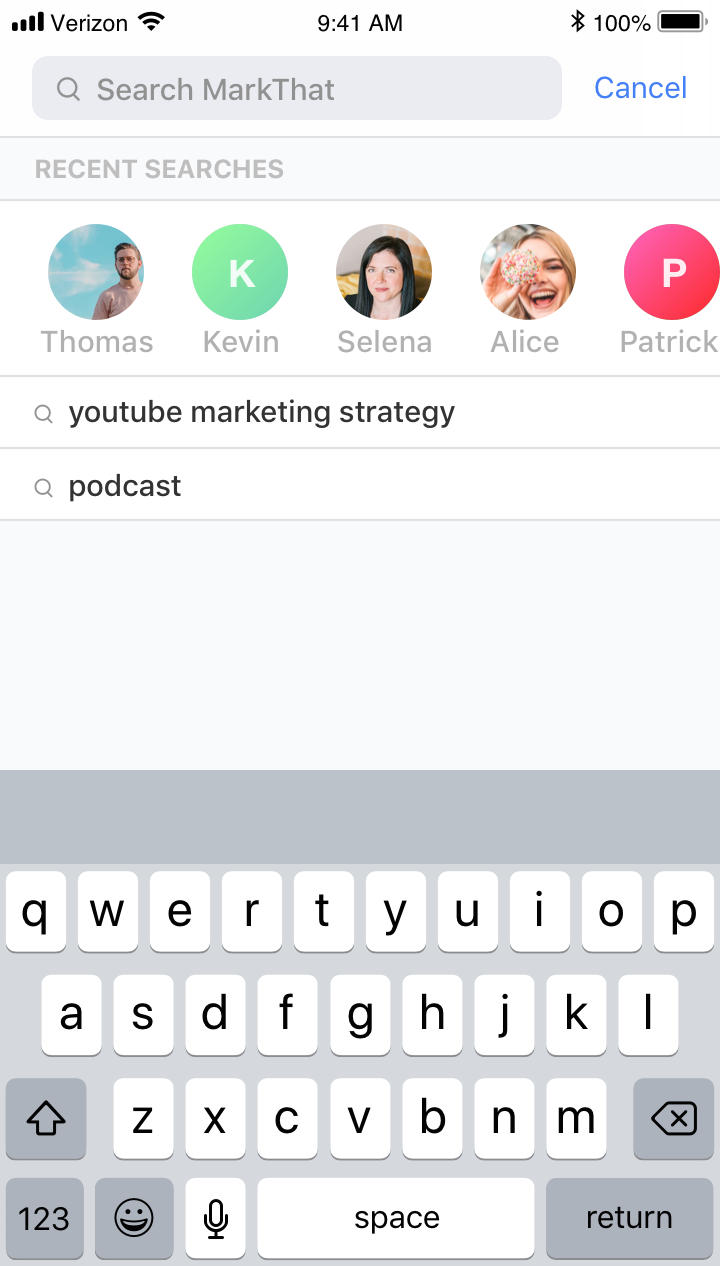

Users can search for a recording either by people involved in the conversation, tags, or title.

Recent searches are shown so that the user can easily find their latest recordings.

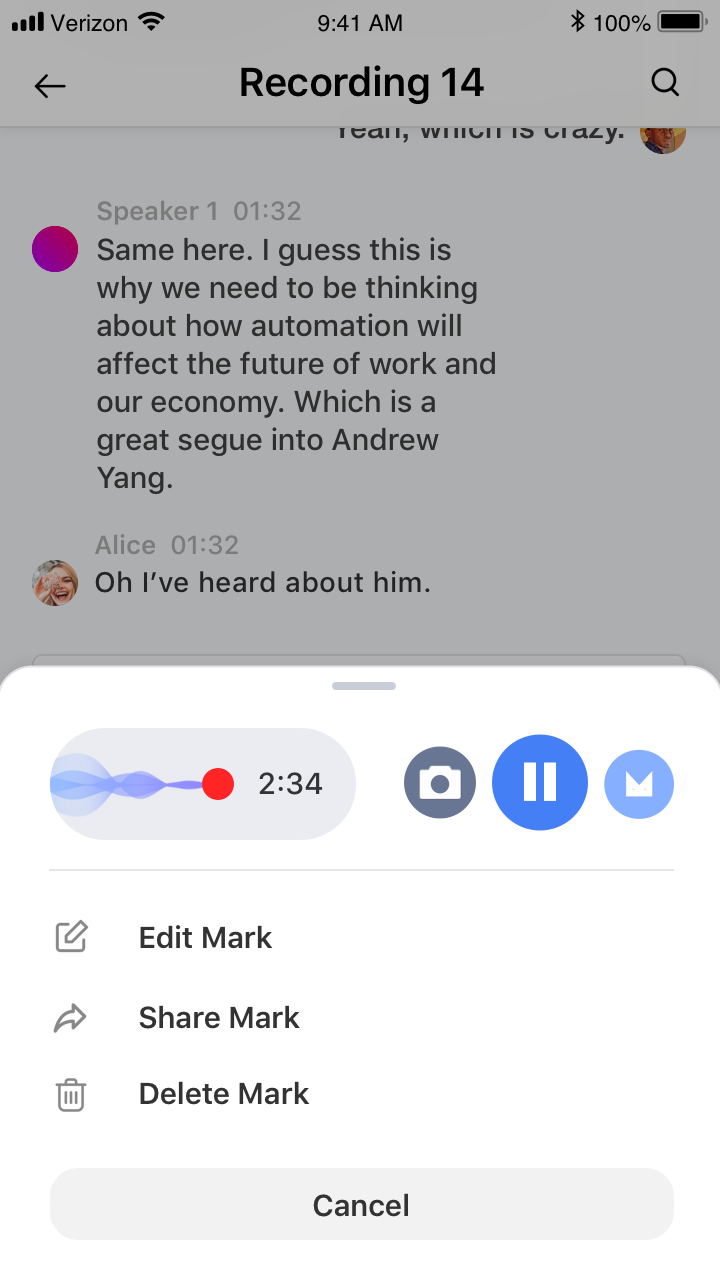

In my v3 iteration of the home screen, I thought an indicator animation combined with a swipe gesture would work well for editing a recording; however, I realized that swiping would conflict with the swipeable tab bar.

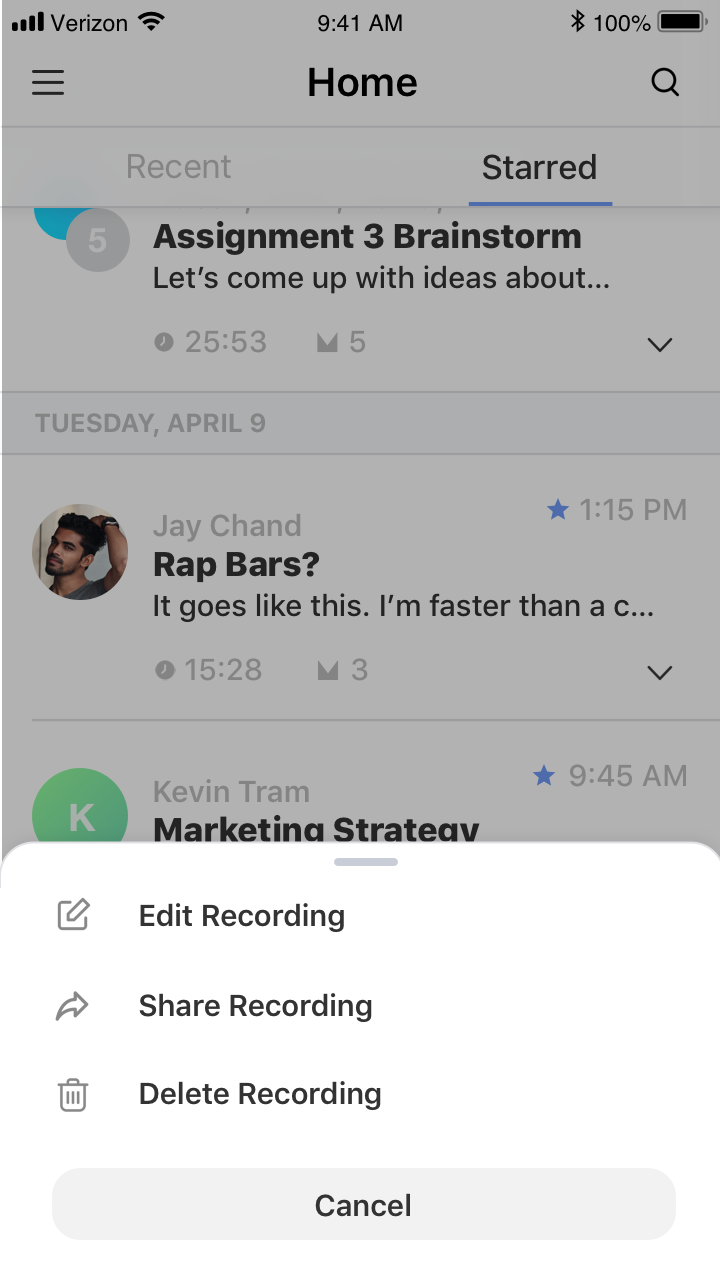

So, I decided on a simple tap on a "more options" icon.

On a separate note, I dropped v3's tag and folder-type display in v4 in favor of just names and profile images, since our conversations are more naturally organized around people.

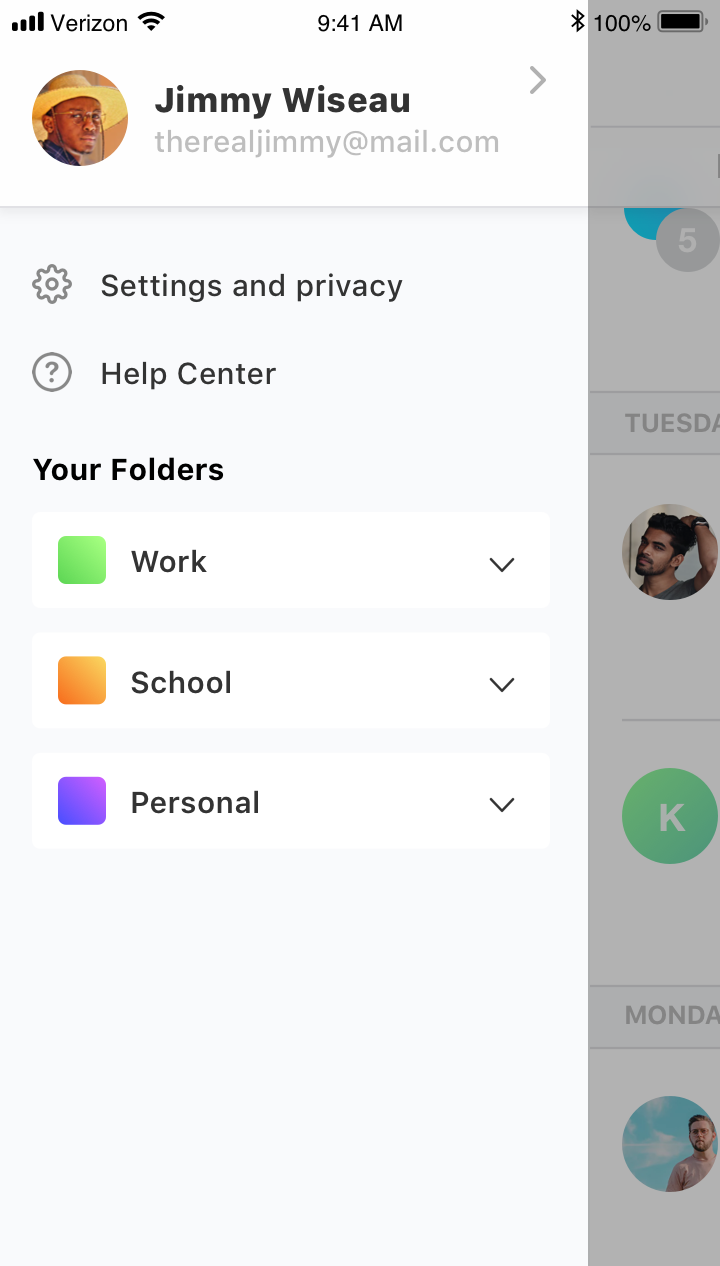

Opening the menu lets a user access their settings (like account, profile, and voice controls).

Instead of having a bottom nav bar with a folders tab on the main screens, I chose to offer a limited number of folders (at least in the free version) to reduce clutter, while still letting users organize recordings into folders.

Users can start recording with a simple push of a button.

*Note: I was unable to prototype it in Origami, but when MarkThat recognizes audio, the sound indicator should display a soundwave-like animation.

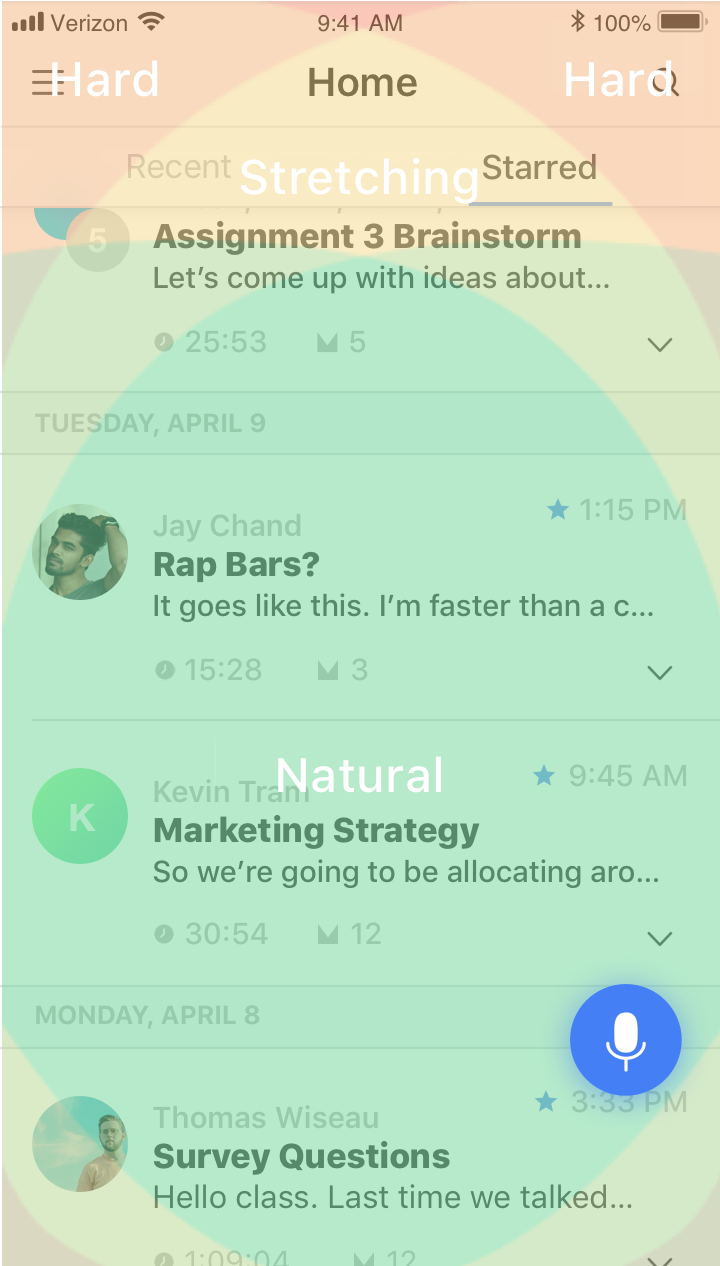

Using a combined right and left thumb map, I placed the recording button on the home screen within the "Natural" thumb threshold for ease of access.

Similarly, I also placed the main action buttons on the recording screen within the "Natural" thumb threshold. However, I might consider switching the mark button with the picture button since marking is more frequently used.

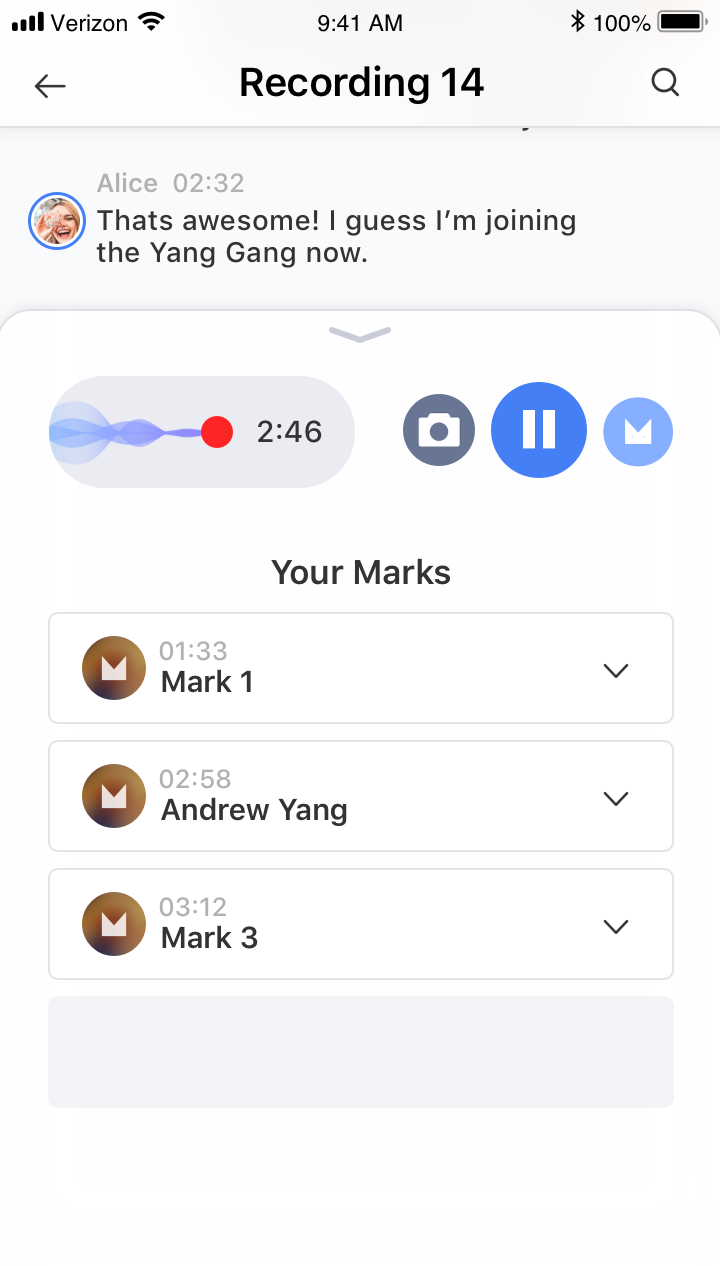

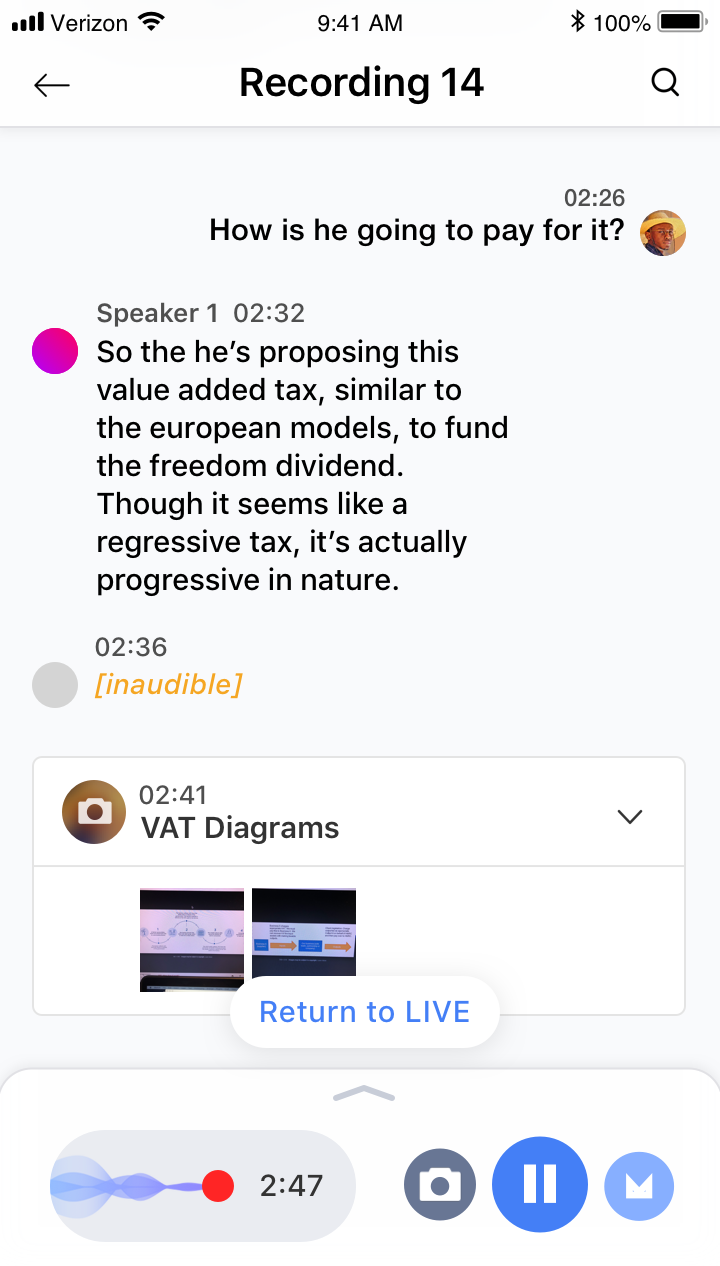

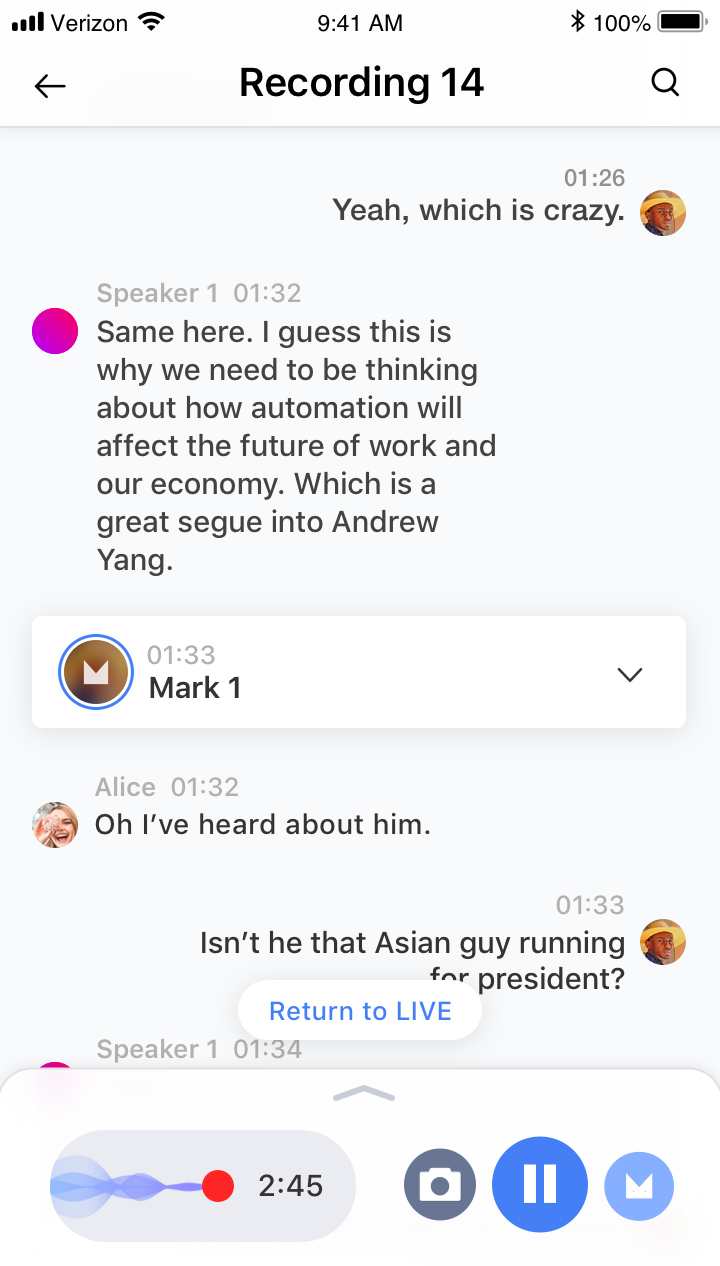

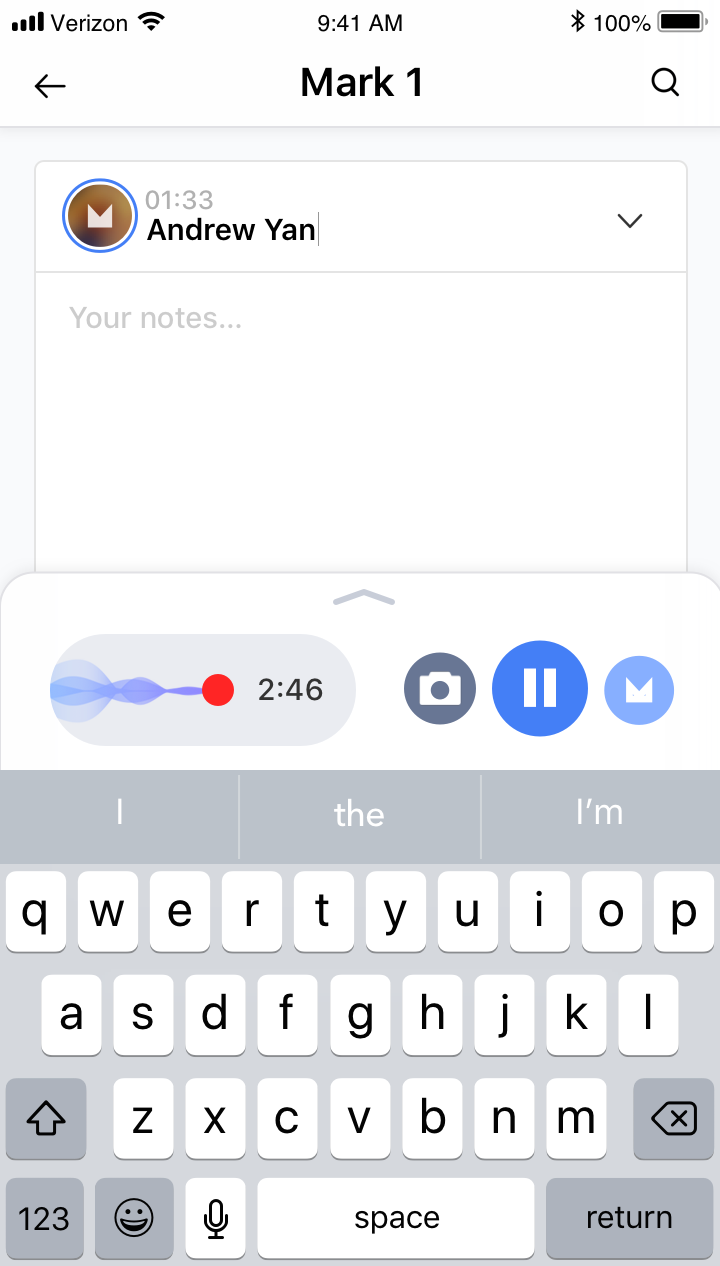

Users can place Marks with a tap or a voice command.

The voice control allows users to be untethered from their device and focus instead on the conversation.

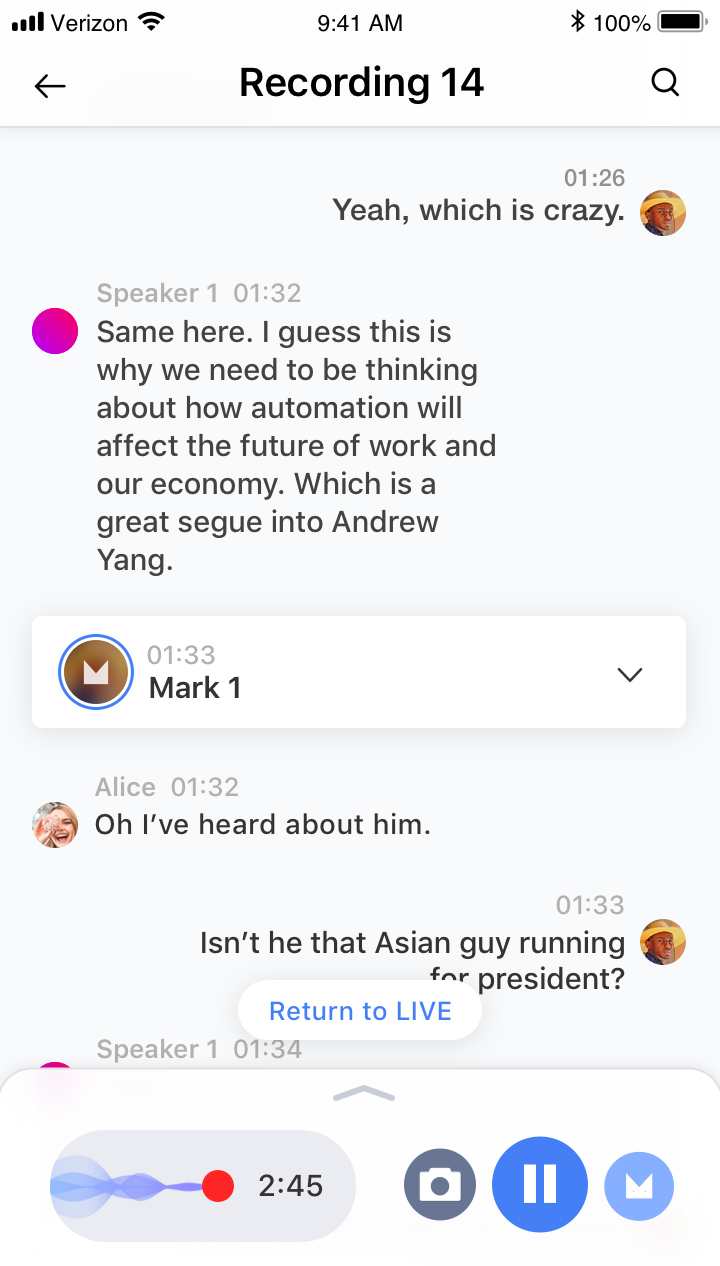

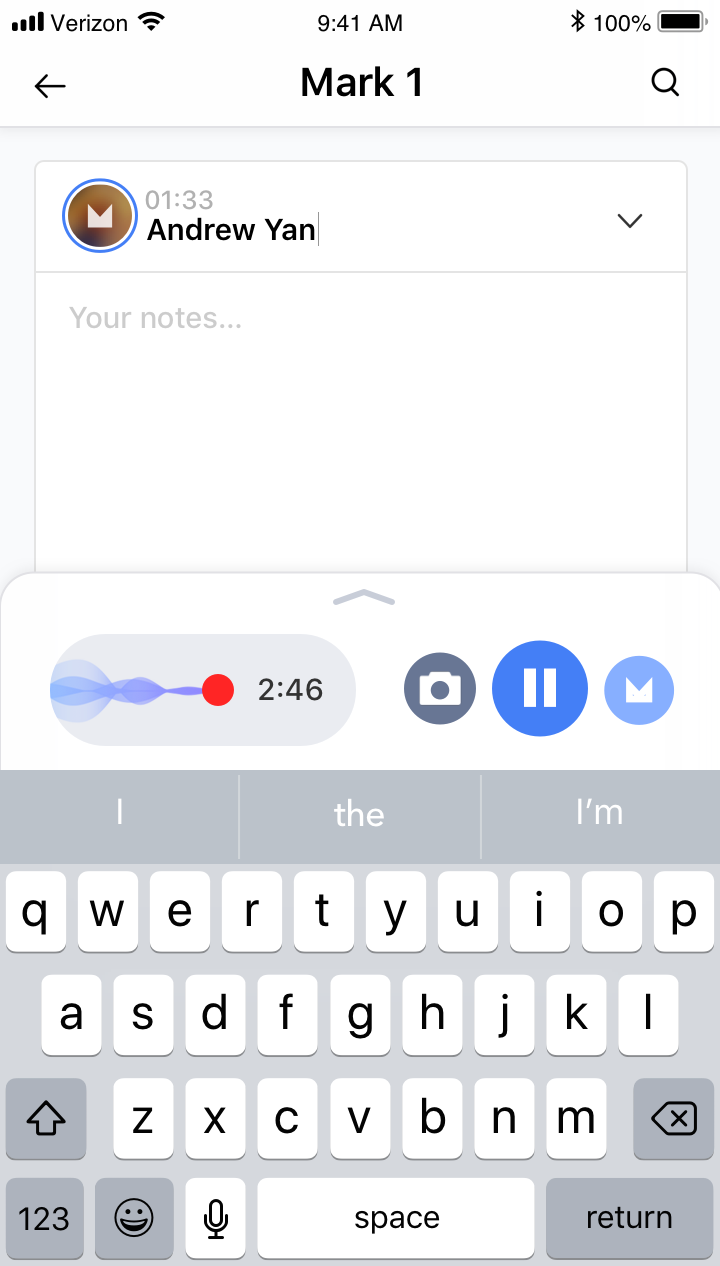

By tapping on a placed Mark, users can edit the Mark's title and/or add further notes.

This lets users both organize their Marks and write any ideas or notes that they didn't necessarily want to say out loud.

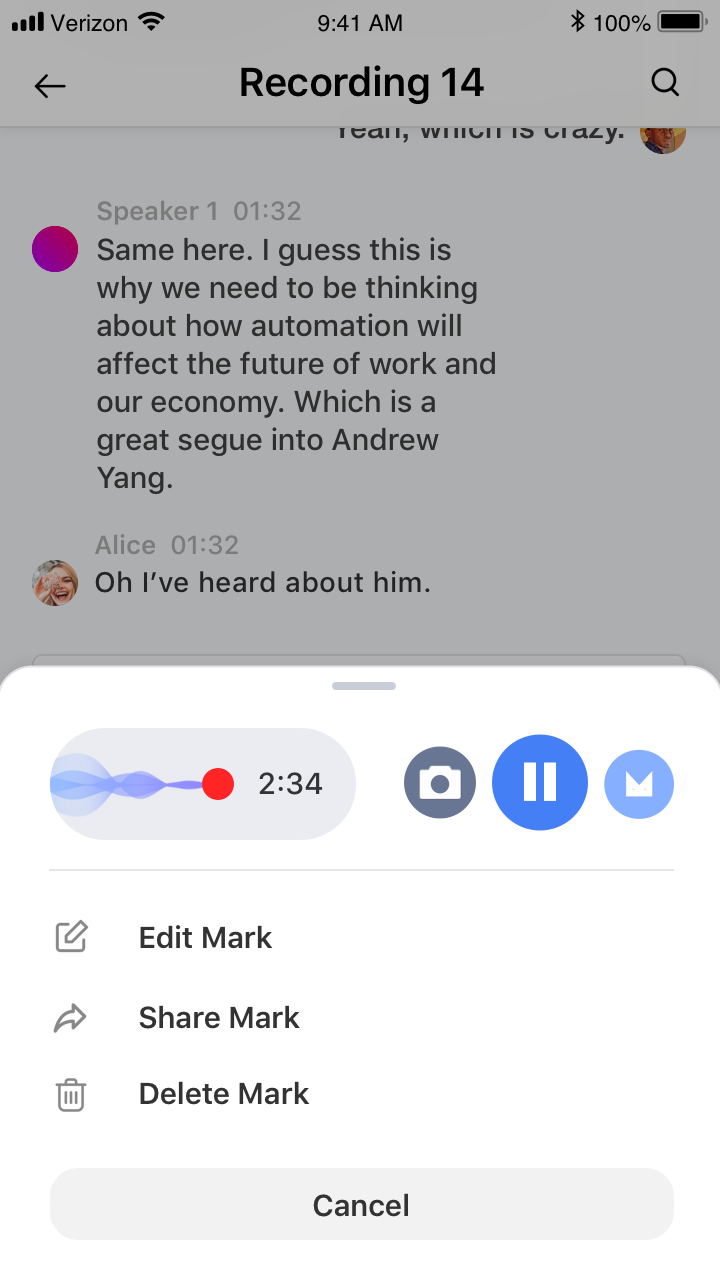

By tapping the downward-facing chevron on a placed Mark, users can edit, share, or delete the Mark.

Users can skip to a Mark's time-stamped index by tapping on it.

This lets users navigate around the recording quickly and easily.
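One way to model this is to treat Marks as timestamps attached to a recording and keep them sorted by time. In the sketch below, the `Mark` and `Recording` types are hypothetical, not MarkThat's actual code: tapping a Mark returns its playback position, and a binary search finds the next Mark after the current playhead.

```python
import bisect
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Mark:
    time: float            # seconds into the recording
    title: str = "Mark"
    notes: str = ""        # extra notes the user adds to the Mark


@dataclass
class Recording:
    duration: float
    marks: List[Mark] = field(default_factory=list)

    def add_mark(self, time: float, title: str = "Mark") -> Mark:
        # Clamp to the recording and insert so marks stay sorted by time.
        mark = Mark(min(max(time, 0.0), self.duration), title)
        times = [m.time for m in self.marks]
        self.marks.insert(bisect.bisect_right(times, mark.time), mark)
        return mark

    def seek_to(self, mark: Mark) -> float:
        """Playback position to jump to when a Mark is tapped."""
        return mark.time

    def next_mark(self, playhead: float) -> Optional[Mark]:
        """First Mark strictly after the playhead, or None if there is none."""
        times = [m.time for m in self.marks]
        i = bisect.bisect_right(times, playhead)
        return self.marks[i] if i < len(self.marks) else None
```

Keeping the list sorted at insertion time means the Mark list in the UI and "jump to next Mark" never need a separate sort.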

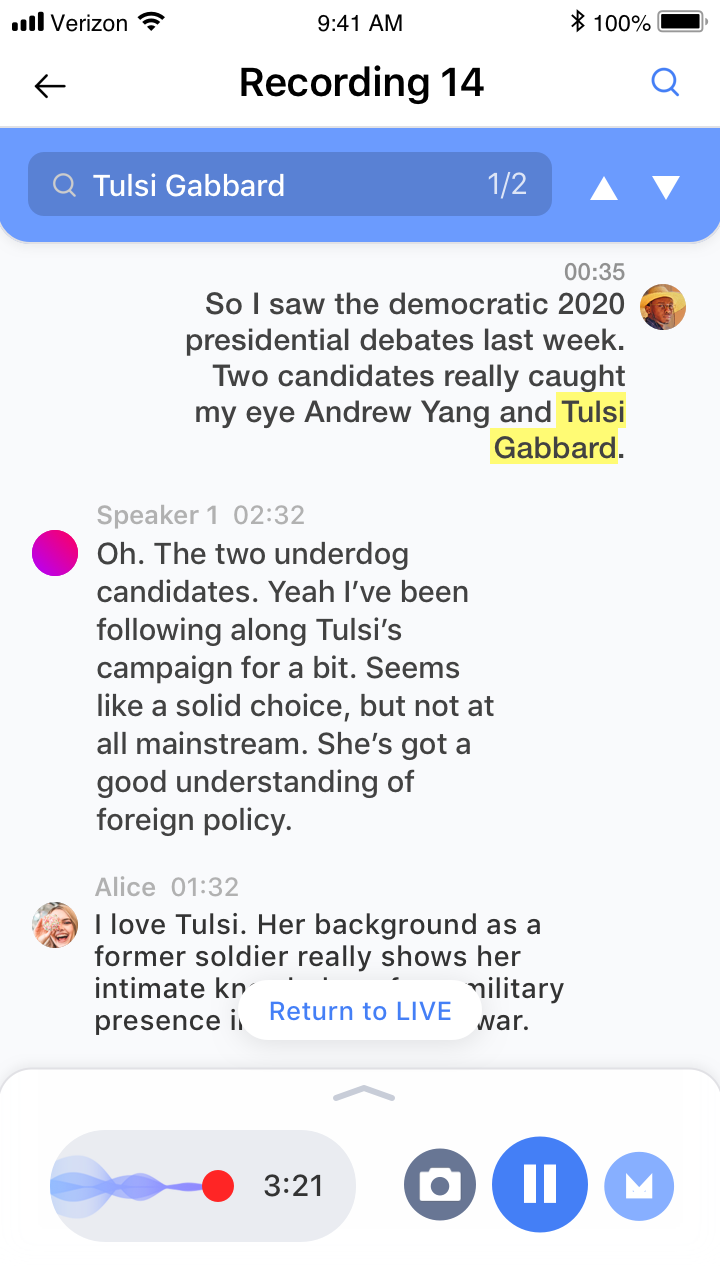

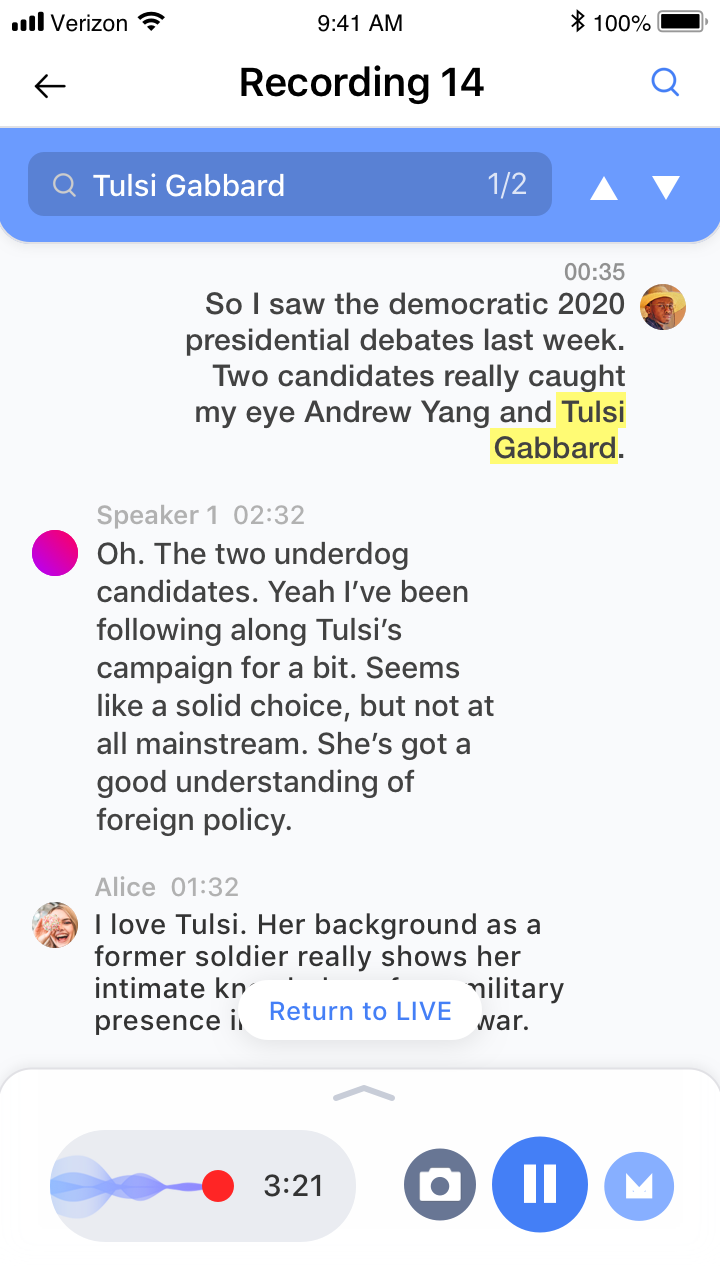

Users can search the recording for a keyword and step through each instance.
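Keyword search can be sketched as matching each transcript word against the query and collecting the start times of the matches, so the UI can step through instances and move the playhead. The word-level `(word, start_seconds)` transcript format and `find_keyword` are assumptions for illustration; this version handles single words only, not multi-word phrases.

```python
import re
from typing import List, Tuple


def find_keyword(transcript: List[Tuple[str, float]], keyword: str) -> List[float]:
    """Return start times of every whole-word, case-insensitive match of `keyword`."""
    needle = keyword.lower()
    hits: List[float] = []
    for word, start in transcript:
        # Drop punctuation such as "due." so it still matches "due".
        normalized = re.sub(r"[^\w']", "", word.lower())
        if normalized == needle:
            hits.append(start)
    return hits
```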

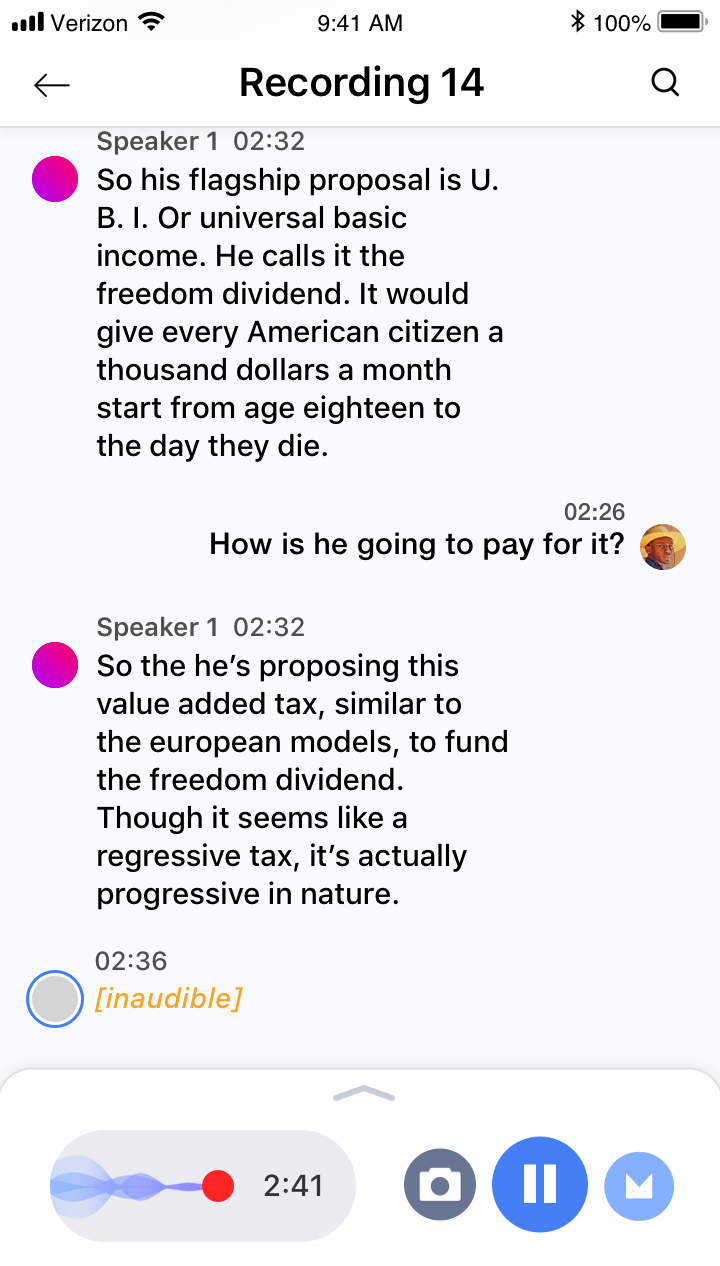

The blue border shows what is selected. Because MarkThat records the actual audio in addition to transcribing it, "inaudible" serves as a placeholder for words the transcription couldn't capture.

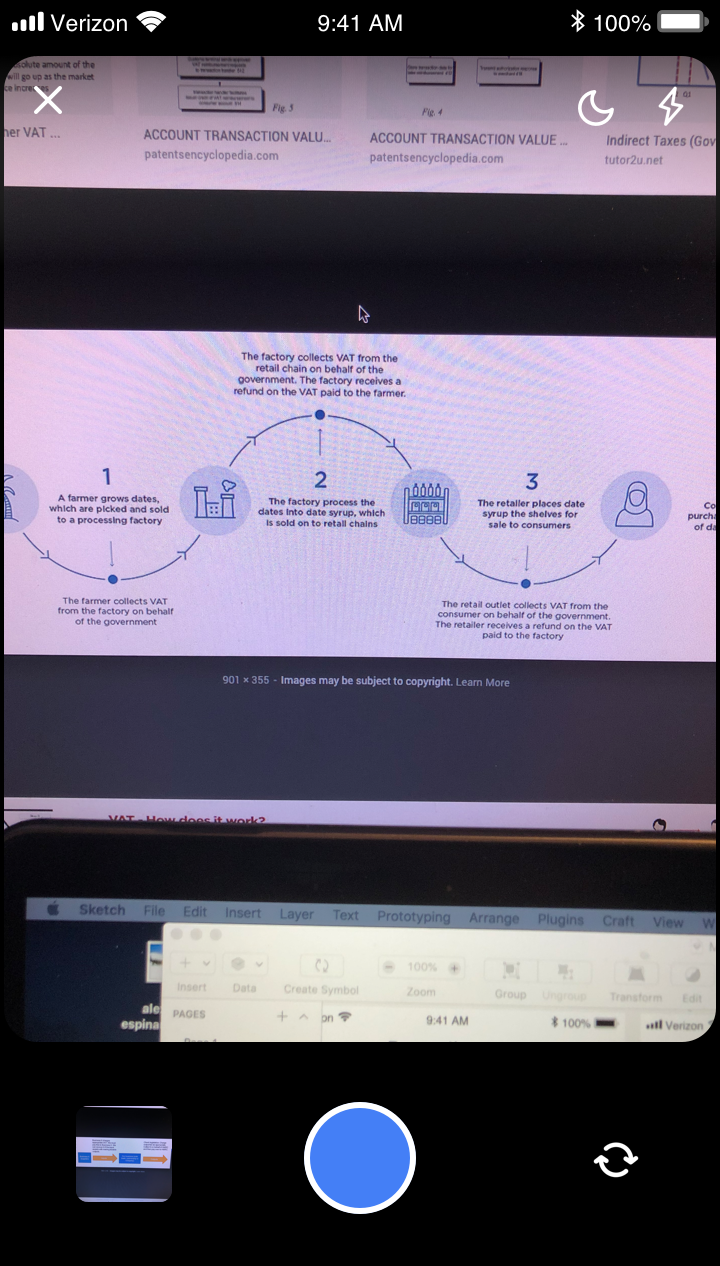

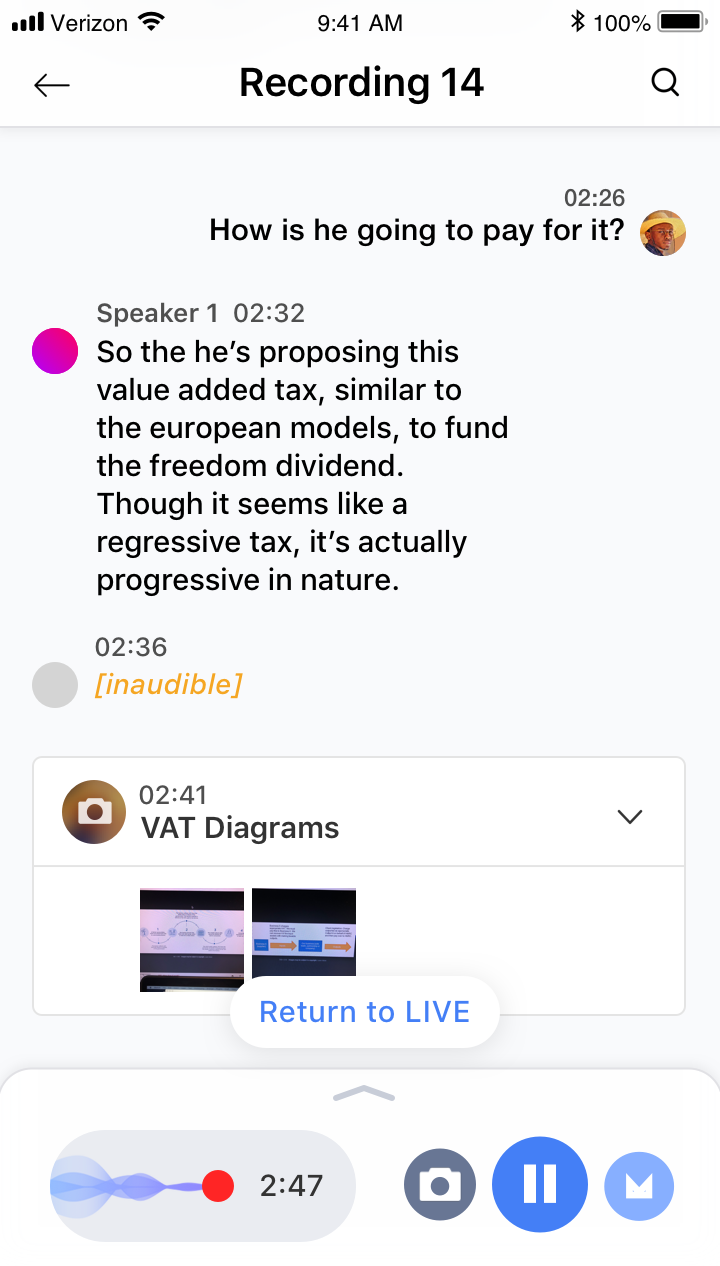

The user just needs to tap on the camera button to take a picture of something.

After pressing the camera button, the user is taken to the camera screen, where they can take pictures with flash, night mode, or the back- or front-facing camera.

Tap the taken images at the bottom left to finish.

The recording remains uninterrupted while you take pictures.

Tap "return to live" to scroll down to the live view.

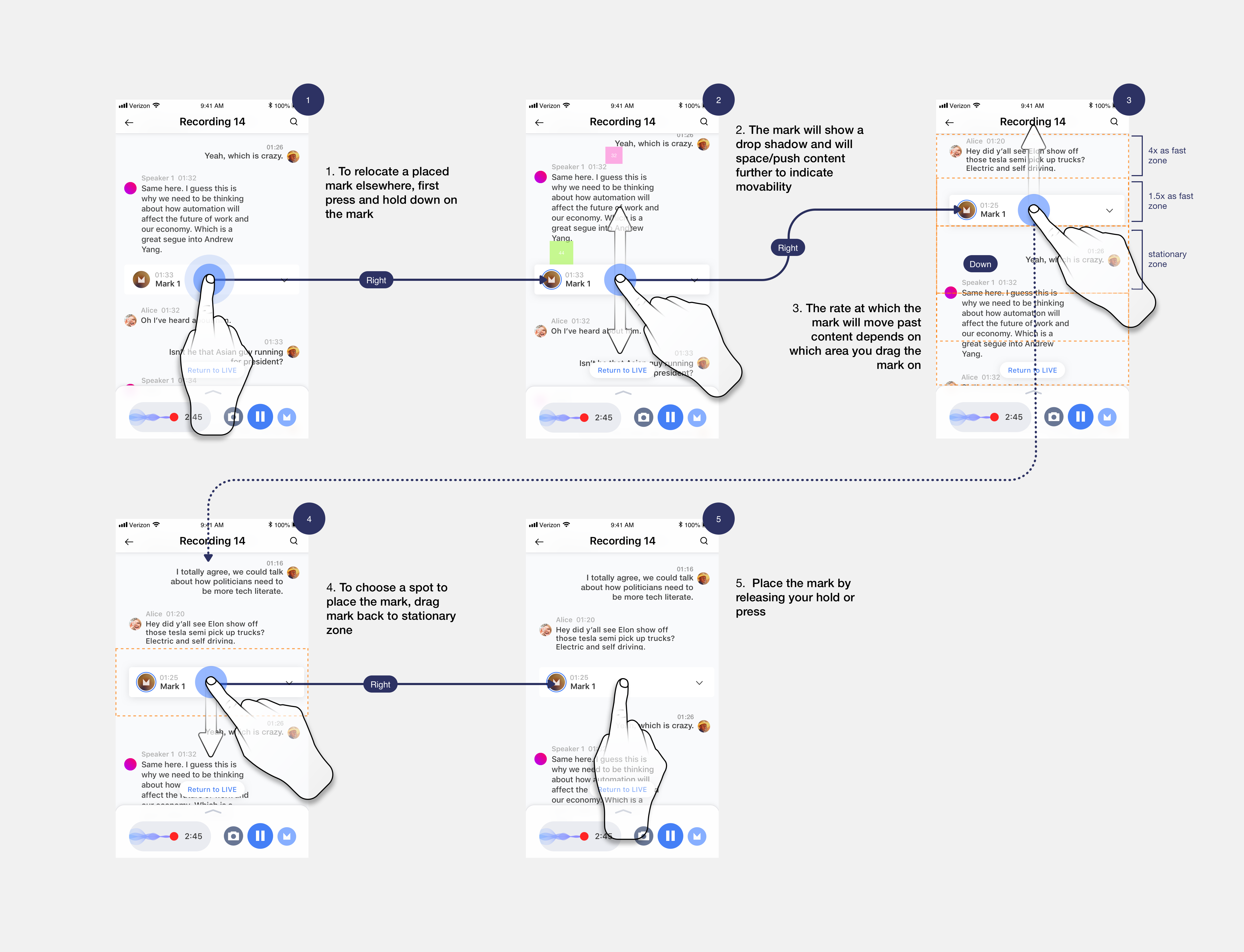

Moving Marks

Marks placed manually, whether by tap or by voice, won't always land in the right spot. To account for mistaken or off-target placements, users can move their Marks around the recording with a long press, drag, and drop. I chose this interaction because 1) a user's touch should directly control the movement of elements and 2) it was the most elegant and intuitive solution I could come up with.
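The drop step of this drag interaction can be sketched as converting the finger's position on a timeline into a time, clamping it to the recording's length, and re-sorting the Marks. The linear position-to-time mapping and the function names are hypothetical assumptions, not the app's actual implementation.

```python
from typing import List


def x_to_time(x: float, timeline_width: float, duration: float) -> float:
    """Map a touch position on an assumed linear timeline to seconds, clamped to the recording."""
    fraction = min(max(x / timeline_width, 0.0), 1.0)
    return fraction * duration


def move_mark(mark_times: List[float], index: int, drop_x: float,
              timeline_width: float, duration: float) -> List[float]:
    """Return a new sorted list of Mark times with Mark `index` moved to the drop point."""
    updated = list(mark_times)
    updated[index] = x_to_time(drop_x, timeline_width, duration)
    return sorted(updated)
```

Clamping means a Mark dragged past either end of the timeline snaps to the start or end of the recording instead of disappearing.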

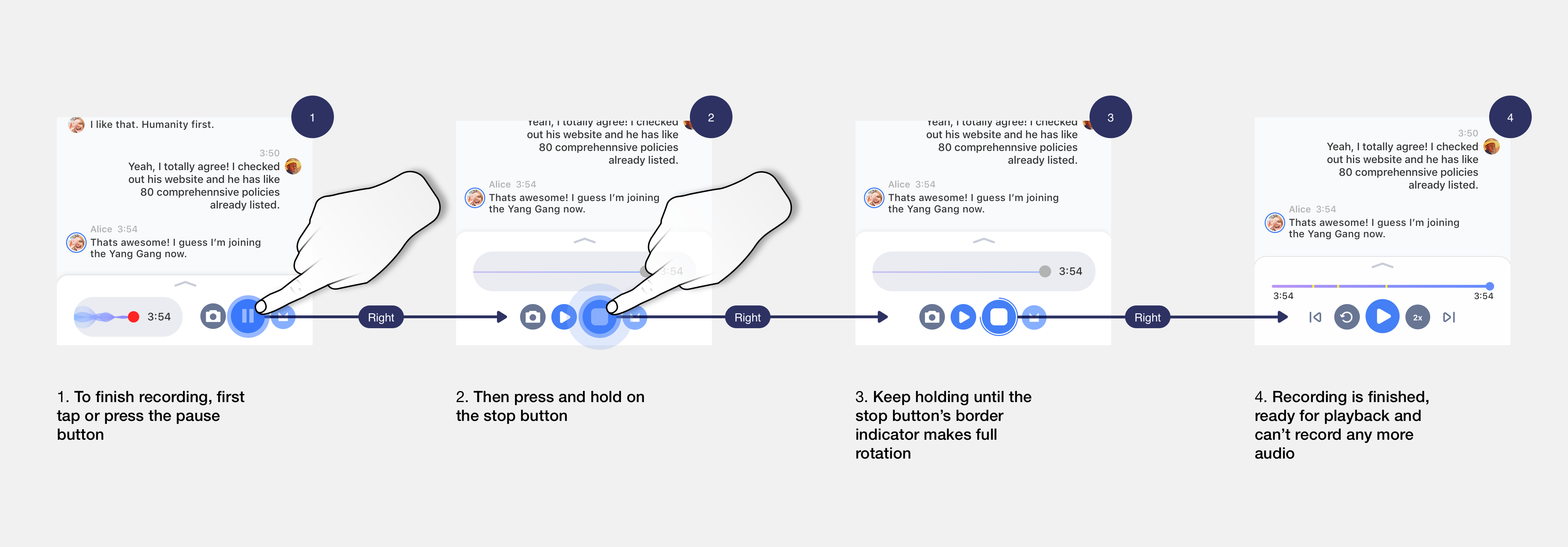

Ending Recording

To prevent accidental or premature recording finishes, users have to first pause the recording and then do a long press on the end button until the circular border indicator makes a complete rotation.
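This safeguard can be expressed as a small guard condition: ending is allowed only while the recording is paused, and only once the long press has lasted long enough for the border indicator to complete a full rotation. The 1.5-second hold and the names below are assumed values for illustration, not figures from the design.

```python
from dataclasses import dataclass

HOLD_TO_END_SECONDS = 1.5  # ASSUMED duration of one full rotation of the ring indicator


@dataclass
class RecorderState:
    is_paused: bool = False
    is_ended: bool = False


def ring_progress(hold_seconds: float) -> float:
    """Fraction of the circular border indicator to draw, from 0.0 to 1.0."""
    return min(max(hold_seconds / HOLD_TO_END_SECONDS, 0.0), 1.0)


def try_end(state: RecorderState, hold_seconds: float) -> bool:
    """End the recording only if it is paused and the press completed a full rotation."""
    if state.is_paused and ring_progress(hold_seconds) >= 1.0:
        state.is_ended = True
    return state.is_ended
```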

After ending the recording, the user can edit or view the recording information.

Using TLDR tech, MarkThat generates a recording summary and tags.

If users want to add their own tags, they can type them in or choose from a generated list of tags based on how frequently terms are mentioned.
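Suggesting tags by frequency of mention can be sketched as a word count that skips common filler words. The tiny stopword list, the thresholds, and `suggest_tags` are illustrative assumptions; this is a plain frequency count, not the summarization model itself.

```python
import re
from collections import Counter
from typing import List

# A tiny illustrative stopword list; a real one would be much longer.
STOPWORDS = {"the", "a", "an", "and", "or", "to", "of", "in", "on", "is", "it",
             "we", "i", "you", "that", "this", "for", "so", "be", "will", "with"}


def suggest_tags(transcript: str, limit: int = 5, min_count: int = 2) -> List[str]:
    """Return up to `limit` words mentioned at least `min_count` times, most frequent first."""
    words = re.findall(r"[a-z']+", transcript.lower())
    counts = Counter(w for w in words if w not in STOPWORDS and len(w) > 2)
    # most_common breaks ties by first appearance in the transcript.
    return [word for word, n in counts.most_common(limit) if n >= min_count]
```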

Changes made will then be applied to recording information.

MarkThat

Designs for now

Font and colors

I chose SF Pro to emulate Apple's aesthetic, since I wanted the visuals to feel consistent when MarkThat is used alongside Siri Shortcuts in the future.

Main Features

Below are just a few of the key features I designed; the rest are shown in the 'Generate & Evaluate' section.

Setup voice recognition for personalized voice commands and multi-party conversations.

Switch between recent and starred conversation views.

Search by person, conversation, Mark, or phrase.

Record and transcribe your conversation with a simple tap.

Place Marks to index key parts of the conversation for later visit.

Add further notes to your Marks.

Edit, share, or delete your Marks.

Look up specific parts of your conversation with keyword search.

Add images from your camera or camera roll.

View and manage your recording's general info.

Conclusion?

Challenges, takeaways, and next steps

My biggest hurdle has been user testing the transcription and voice command features. It's difficult to create a design prototype that can fully convey working transcription along with voice control. Instead, I used Dialogflow (formerly API.AI), Google's Live Transcribe, Android Studio, and some manual simulation to test the effectiveness of these features. Also, without a team, I had to rely on bouncing my ideas off friends and kind strangers.

My takeaways are:

- No matter how small the project, start by creating an organized Sketch file and design system.

It may seem tedious, but in the long run it is much more practical.

- Spend more time defining the problem.

Having a good grasp of the problem is always better than haphazardly jumping into possible solutions.

- Create versatile products.

Not everybody is the same; by building products and services that offer a variety of ways to interact, you can make things more accessible.

This project is still 'In Progress'. I will continue user testing and improving multiple-speaker detection. I also want to look into introducing more trigger words or voice actions so that MarkThat can schedule meetings, set reminders and tasks, and clarify words or terms. Integration with Siri Shortcuts is another avenue of exploration.

Thanks for reading! If you want to be a part of MarkThat, give feedback, or just tell me your deepest darkest secret, shoot me an email at [email protected]

My Projects

MarkThat: Voice Powered NotesProduct Design, iXD, Prototyping

Spotify Podcast RedesignProduct Design, iXD, UI Design, Prototyping

Fiori Design System and more @SAPProduct Design, iXD, UI Design, Prototyping

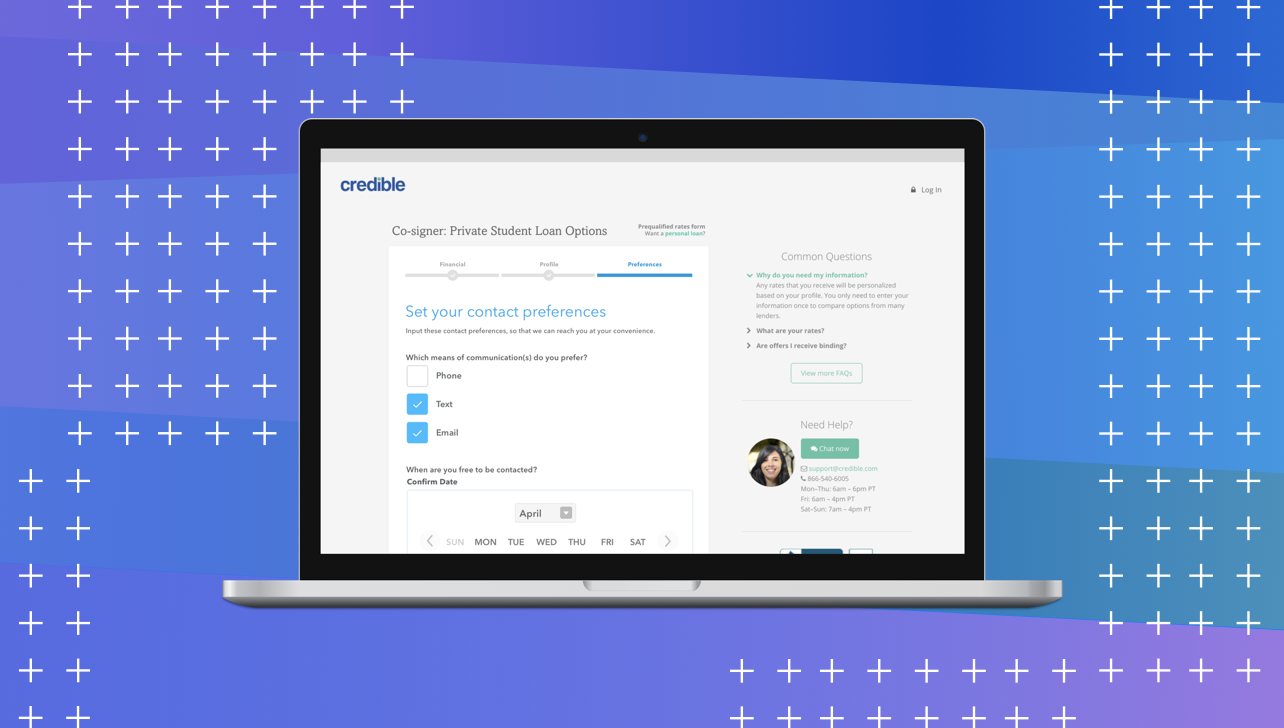

Credible — Contact PreferencesProduct Design, iXD, User Research

UCSC HCI Lab — EmotiCalResearch, UX/UI Design, Development

Voice @Yahoo!VUI Design, UX Research, Prototyping